2. Mathematical Foundations

This chapter covers the mathematical foundations for digital circuit design. Most importantly, we introduce binary numbers and Boolean algebra. Furthermore, we discuss methods to determine the extrema of functions for use in the context of minimizing the delay of a circuit, including arithmetic and geometric means, root finding for polynomials, and elementary calculus.

2.1. Numbers

Digital circuits use voltages to encode information, including numbers. Among various alternatives, binary numbers have emerged as the best match for digital circuits. Although the encoding of binary numbers with low and high voltages differs from our familiar decimal number system, the underlying principles are essentially the same. In the following, we discuss the representation and manipulation of numbers in the binary number system.

2.1.1. Positional Number Systems

We are all familiar with numbers as a concise abstraction for counting concrete quantities like two apples or seven oranges. Most of us associate a magnitude with a number like 4362. If you think this number is large, it’s perhaps because you are just wondering about how big a pile of 4362 apples might be. If, on the other hand, you are saving money for a trip into space, then 4362 Euros are too little to get you even close. Deeper thoughts about number 4362 reveal that it is a juxtaposition of the four digits 4, 3, 6, and 2, with an order that matters. Each digit counts a quantity. Digit 2 counts ones, digit 6 counts tens, digit 3 counts hundreds, and digit 4 counts thousands. The value of the number 4362 is actually the sum of the counts:

\(4362\ =\ 4 \cdot 1000 + 3 \cdot 100 + 6 \cdot 10 + 2 \cdot 1\,.\)

Here is another number with the same value 4362:

[Figure: the number 4362 written with three Babylonian cuneiform symbols]

These Babylonian cuneiform symbols may look unfamiliar, but the underlying rules to determine the value of the number are identical. The symbols count quantities. The leftmost symbol denotes 1, the middle symbol denotes 12, and the rightmost symbol denotes 42. Given the order of the three symbols, the rightmost symbol counts ones, the middle symbol counts sixties, and the leftmost symbol counts three-thousand-six-hundreds. The value of the number is the sum of the counts:

\(1 \cdot 3600 + 12 \cdot 60 + 42 \cdot 1\ =\ 4362\,.\)

Four thousand years ago, a Babylonian might have looked at the number, and would have been in awe about a pile of that many apples just like we are about a pile of 4362 apples today.

We do not suggest that digital circuits are capable of imagining how big a pile of

\(1\,0001\,0000\,1010\)

apples might be. But, this number has value 4362 as well, and the value can be derived by the same counting rules. Each digit 0 or 1 counts quantities that are powers of 2:

\[\begin{eqnarray*} 1\,0001\,0000\,1010 &=& 1 \cdot 4096 + 0 \cdot 2048 + 0 \cdot 1024 + 0 \cdot 512 + 1 \cdot 256 + 0 \cdot 128 + 0 \cdot 64 + 0 \cdot 32 + 0 \cdot 16 + 1 \cdot 8 + 0 \cdot 4 + 1 \cdot 2 + 0 \cdot 1 \\ &=& 4362\,. \end{eqnarray*}\]

The three numbers above represent value 4362 in three different forms with a common set of rules known as a positional number system. The Babylonians were among the first to use a positional number system.[1] They were concerned about recording their number symbols with a stylus on clay tablets. Our familiar ten digits \(0, 1, 2, \ldots, 9\) seem to have their origins in counting with ten fingers or perhaps toes. In contrast, bits 0 and 1 are abstract representations of low and high voltages in digital circuits.

In a positional number system, number \(N\) is a juxtaposition of digits, optionally with a radix point to separate the integral and fractional parts:

\[N\ =\ a_{q-1} a_{q-2} \ldots a_1 a_0\, .\, a_{-1} a_{-2} \ldots a_{-p}\,.\]

Number \(N\) has an integral part of \(q\) digits \(a_i\) in position \(i,\) where \(0 \le i < q,\) and a fractional part of \(p\) digits with position \(i\) in range \(-p \le i < 0.\) The number system has an implicit base \(b.\) Digit \(a_i\) in position \(i\) is in range \(0 \le a_i < b.\) A number system with base \(b\) has \(b\) digits. Leftmost digit \(a_{q-1}\) is the most significant digit of number \(N,\) and rightmost digit \(a_{-p}\) is the least significant digit. The least significant digit of integral numbers is \(a_0,\) because they do not have a fractional part. The value of number \(N\) is the value of the polynomial:

\[N\ =\ \sum_{i=-p}^{q-1} a_i b^i\ =\ a_{q-1} b^{q-1} + \cdots + a_1 b^1 + a_0 b^0 + a_{-1} b^{-1} + \cdots + a_{-p} b^{-p}\,.\]

The positional notation is a convenient shorthand for this polynomial where the digits are the counts of the powers of base \(b.\) We say that \(b^i\) is the weight of digit \(a_i.\) Our familiar decimal number system has base \(b=10\) with ten digits \(0, 1, \ldots, 9.\) Therefore, the value of integer number 4362 is

\(4362 = 4 \cdot 10^3 + 3 \cdot 10^2 + 6 \cdot 10^1 + 2 \cdot 10^0\,.\)

Analogously, the Babylonian number system is sexagesimal with base \(b=60.\) Thus, the Babylonians needed sixty digits. They did not invent sixty different hieroglyphs, but used just two different cuneiform shapes, the wedge and the chevron, to compose the digits. By associating the wedge with value 1 and the chevron with value 10, we find that the symbol composed of one chevron and two wedges denotes value 12, because \(10 + 1 + 1 = 12.\) Similarly, the symbol composed of four chevrons and two wedges has value \(4 \cdot 10 + 1 + 1 = 42.\) In fact, the Babylonians mixed a decimal system for the digits into the sexagesimal number system, such that

\(4362\ =\ 1 \cdot 60^2 + (1 \cdot 10 + 2) \cdot 60^1 + (4 \cdot 10 + 2) \cdot 60^0\,.\)

The binary number system with base \(b=2\) has two digits, bits 0 and 1. It is the positional number system with the fewest digits and the longest strings of digits to represent a number, including

\(1\,0001\,0000\,1010\ =\ 1 \cdot 2^{12} + 0 \cdot 2^{11} + 0 \cdot 2^{10} + 0 \cdot 2^9 + 1 \cdot 2^8 + 0 \cdot 2^7 + 0 \cdot 2^6 + 0 \cdot 2^5 + 0 \cdot 2^4 + 1 \cdot 2^3 + 0 \cdot 2^2 + 1 \cdot 2^1 + 0 \cdot 2^0\ =\ 4362\,.\)
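The polynomial evaluation behind all three representations of 4362 can be sketched in a few lines of Python. This is an illustrative sketch only: the function name `positional_value` is hypothetical, and it evaluates the polynomial with Horner's scheme (repeated multiply-and-add) rather than by summing explicit powers of the base.

```python
def positional_value(digits, base):
    """Value of an integral number given its digit values, most significant first."""
    value = 0
    for digit in digits:
        if not 0 <= digit < base:
            raise ValueError(f"digit {digit} is out of range for base {base}")
        # Horner's scheme: shift the value so far by one position, then add the digit.
        value = value * base + digit
    return value


decimal = positional_value([4, 3, 6, 2], 10)
sexagesimal = positional_value([1, 12, 42], 60)
binary = positional_value([1, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 1, 0], 2)
print(decimal, sexagesimal, binary)  # 4362 4362 4362
```

The sexagesimal digits are passed as their decimal values 1, 12, and 42, which mirrors how the Babylonian symbols compose each base-60 digit from chevrons and wedges.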

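When implementing circuits to store and manipulate numbers, a key issue is the number of digits that our circuits can support. More specifically, we may ask how many digits \(q\) we need to represent integral number \(N.\) In a positional number system with base \(b,\) the answer is the smallest \(q\) such that \(N < b^q.\) In terms of the logarithm to base \(b\) [2] and the ceiling function, the smallest number of digits to represent integer \(N\) is

\[q = \lceil \log_b N \rceil\,,\]

provided that \(N\) is not an exact power of \(b.\) If \(N = b^k,\) the strict inequality \(N < b^q\) demands one more digit, \(q = k + 1.\) The expression \(\lfloor \log_b N \rfloor + 1\) covers both cases for \(N \ge 1.\)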

Conversely, we may ask how many integral numbers we can represent with \(q\) digits. In a positional number system with base \(b,\) the answer is \(b^q\) numbers in range \([0, b^q - 1].\) This bracket notation lists the smallest integer 0 and the largest integer \(b^q - 1\) of the range. The examples below illustrate these insights.

Example 2.1. Determine the number of digits \(q\) that we need to represent number \(N = 89\) in the decimal and the binary number systems.

By default, we interpret number \(N=89\) as a decimal number with base \(b=10,\) because this is how we were trained to interpret numbers. Since \(N=89\) has two digits, namely 8 and 9, we obviously need \(q=2\) digits to represent \(N\) as a decimal number. We confirm this trivial observation by checking that \(89 < b^q = 10^2 = 100.\) We conclude that we do not need 3 digits to represent 89. On the other hand, if we had 1 digit only, then \(10^1 = 10 < 89\) violates the condition \(N < b^q.\) Therefore, 1 digit is not sufficient to represent 89. Our formula \(q = \lceil \log_b N\rceil = \lceil \log_{10} 89\rceil\) produces the same result \(q = 2\) when evaluated blindly.

If we wish to represent \(N=89\) as a binary number with base \(b=2,\) the formula yields \(q = \lceil \log_2 89\rceil = 7,\) that is we need 7 bits to represent 89 as a binary number. Alternatively, we could have used a trial-and-error strategy to find the smallest power of 2 such that \(N < 2^q.\) Pick \(q = 4,\) and we find that \(q=4\) does not qualify, because \(2^4 = 16\) is not larger than \(N=89.\) Similarly, \(q=6\) yields \(2^6 = 64,\) which is still smaller than 89. The next larger value \(q = 7\) produces \(2^7 = 128,\) which is larger than 89, indeed. Since 7 is the smallest \(q\) such that \(89 < 2^q,\) we need 7 bits to represent 89 as a binary number.

Example 2.2. How many integral numbers can we represent with \(q=8\) digits in the decimal and binary number systems?

We know that we can represent \(b^q\) numbers in range \([0,b^q-1]\) in a positional number system with base \(b.\) Thus, \(q=8\) digits in the decimal number system are good for \(10^8\) = 100,000,000 numbers. We can enumerate these numbers as a sorted list:

\[0000\,0000,\ \ 0000\,0001,\ \ 0000\,0002,\ \ \ldots,\ \ 9999\,9998,\ \ 9999\,9999\,.\]

The smallest number of the list is \(0000\,0000 = 0,\) and the largest is \(9999\,9999 = 10^8 - 1.\) The bracket notation summarizes this range of integers compactly as \([0, 10^8-1].\)

In the binary number system, \(q=8\) digits or bits can represent only \(b^q=2^8=256\) numbers in range \([0,b^q-1]=[0,255].\) The sorted list of 8-bit binary numbers is:

\[0000\,0000,\ \ 0000\,0001,\ \ 0000\,0010,\ \ \ldots,\ \ 1111\,1110,\ \ 1111\,1111\,.\]

The smaller the base of a number system is, the smaller is the number range that we can represent with a given number of digits \(q.\)
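Both questions, how many digits a number needs and which range a given number of digits covers, can be checked with a short Python sketch. The names `num_digits` and `digit_range` are hypothetical. The sketch searches for the smallest \(q\) with \(N < b^q\) by integer multiplication instead of evaluating a floating-point logarithm, and it reports at least one digit, since even 0 is written with one digit.

```python
def num_digits(n, base):
    """Smallest q >= 1 such that n < base**q, for a nonnegative integer n."""
    if n < 0 or base < 2:
        raise ValueError("expected n >= 0 and base >= 2")
    q, limit = 1, base
    while n >= limit:  # n does not fit into q digits yet
        q += 1
        limit *= base
    return q


def digit_range(q, base):
    """Smallest and largest integer representable with q digits."""
    return 0, base**q - 1


print(num_digits(89, 10), num_digits(89, 2))  # 2 7
print(num_digits(100, 10))                    # 3
print(digit_range(8, 10))                     # (0, 99999999)
print(digit_range(8, 2))                      # (0, 255)
```

The call with 100 illustrates that an exact power of the base needs one digit more than its logarithm suggests.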

Example 2.3. We may invent a new positional number system by choosing a base and symbols for the digits. The hexadecimal number system is a relatively new number system that gained traction in the \(20^{th}\) century when computer technology emerged. The base of the hexadecimal system is \(b=16.\) Thus, we need sixteen digits. Since the 10 decimal digits are not enough, the hexadecimal system borrows the first six letters of the alphabet as symbols for the digits beyond 9:

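| hexadecimal digit | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | A | B | C | D | E | F |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| decimal value | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 |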

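The value of hexadecimal number CAFE is

\[\text{CAFE}\ =\ 12 \cdot 16^3 + 10 \cdot 16^2 + 15 \cdot 16^1 + 14 \cdot 16^0\ =\ 51966\,,\]

and the FBI has value

\[\text{FBI}\ =\ 15 \cdot 16^2 + 11 \cdot 16^1 + 1 \cdot 16^0\ =\ 4017\,,\]

if we insist on reading letter I as number 1.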

We need \(q = \lceil \log_{16} 89\rceil = 2\) hexadecimal digits to represent number 89, just as many as decimal digits. However, the same number of hexadecimal digits enable us to represent many more numbers than in the decimal system. For comparison with Example 2.2, hexadecimal numbers with \(q = 8\) digits can represent \(b^q = 16^8 = 4{,}294{,}967{,}296\) numbers in range \([0,16^8-1].\)
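Python's built-in `int(text, base)` and `format(value, "X")` read and write hexadecimal numbers, so the hexadecimal values discussed in this example can be double-checked quickly. The snippet is only a convenience check, with FBI written as `FB1` to follow the reading of letter I as digit 1.

```python
cafe = int("CAFE", 16)  # parse a string of hexadecimal digits
fb1 = int("FB1", 16)    # "FBI" with letter I read as digit 1
print(cafe, fb1)        # 51966 4017

print(format(89, "X"))  # 59, i.e. two hexadecimal digits for decimal 89
print(16**8)            # 4294967296 numbers with eight hexadecimal digits
```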

2.1.2. Number Conversion

Number conversion is the process of changing the representation of a number from one number system into another. The value of the number is not affected. For the sake of clarity, we make the system of number \(N\) explicit by annotating the base as a subscript, as in \(N_b.\) For example, we interpret \(11_{10}\) as decimal number eleven, and \(1011_2\) as a binary number with value eleven. Thus, equality \(11_{10} = 1011_2\) makes sense whereas \(11 = 1011\) does not.

Converting a number from any positional number system into decimal is easy, because we are used to decimal arithmetic. All we need to do is evaluate the polynomial expression of the number. This process is called positional conversion. For example, converting binary number \(1011_2\) into decimal yields:

\[1011_2\ =\ 1 \cdot 2^3 + 0 \cdot 2^2 + 1 \cdot 2^1 + 1 \cdot 2^0\ =\ 8 + 2 + 1\ =\ 11_{10}\,.\]

Converting a decimal number into another system requires a little more work. The conversion algorithm is called successive division, and works as follows: Initially, find the largest power \(b^p\) of base \(b\) that is less than or equal to \(N_{10}.\) Then, divide \(N_{10}\) by \(b^p,\) record the integer quotient \(Q = \lfloor N_{10}/b^p \rfloor\) as digit \(a_p = Q\) in position \(p,\) and repeat the division with remainder \(R = N_{10} - Q \cdot b^p\) and next smaller position \(p-1\) until position 0 is reached. If the remainder becomes zero earlier, all remaining digits are 0. For example, let us convert \(11_{10}\) from decimal to binary. The largest power of 2 less than or equal to \(11\) is \(8 = 2^3.\) Therefore, bit \(a_3 = 1.\) The remainder is \(R = 11 - 8 = 3.\) Dividing 3 by the next smaller power of 2, i.e. \(2^2 = 4,\) yields quotient \(\lfloor 3/2^2 \rfloor = 0\) and remainder 3, so that \(a_2 = 0.\) The next smaller power of 2 is \(2^1 = 2.\) Since the division \(3/2^1\) yields quotient 1 and remainder 1, we find \(a_1 = 1,\) and continue the division with remainder 1. The next smaller power is \(2^0 = 1,\) which yields quotient \(1 / 2^0 = 1\) and remainder 0. Thus, bit \(a_0 = 1,\) and the successive division terminates. As a result, we have determined the bits of the binary number \(a_3 a_2 a_1 a_0 = 1011_2,\) or, in polynomial form:

\[11_{10}\ =\ 1 \cdot 2^3 + 0 \cdot 2^2 + 1 \cdot 2^1 + 1 \cdot 2^0\ =\ 1011_2\,.\]

Converting decimal number \(89_{10}\) into the hexadecimal system of Example 2.3 works analogously. The largest power of base 16 less than or equal to 89 is \(16^1 = 16,\) because \(16^2 = 256\) is larger than 89. The division \(89/16\) yields quotient \(a_1 = 5\) and remainder \(9.\) Thus, digit \(a_0 = 9,\) and we obtain \(89_{10} = 59_{16}.\)
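The successive division that starts at the largest power of the base can be sketched as follows. The function name `to_base_msd_first` is hypothetical, and the sketch assumes a positive integer input. It visits every position down to 0, so that trailing zero digits are recorded even after the remainder has reached zero.

```python
def to_base_msd_first(n, base):
    """Digits of positive integer n in the given base, most significant first."""
    if n <= 0 or base < 2:
        raise ValueError("expected n > 0 and base >= 2")
    # Find the largest exponent p such that base**p <= n.
    p = 0
    while base ** (p + 1) <= n:
        p += 1
    digits = []
    remainder = n
    for position in range(p, -1, -1):
        # The quotient is the digit in this position; continue with the remainder.
        quotient, remainder = divmod(remainder, base**position)
        digits.append(quotient)
    return digits


print(to_base_msd_first(11, 2))   # [1, 0, 1, 1]
print(to_base_msd_first(89, 16))  # [5, 9]
print(to_base_msd_first(8, 2))    # [1, 0, 0, 0]
```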

An alternative successive division algorithm saves us the initial step of finding the largest power of base \(b,\) and produces the digits in the opposite order from least significant to most significant digit. Rather than recording quotients, this algorithm records remainders. We write the integer division of numbers \(X\) and \(Y\) as

\[X\, /\, Y\ =\ Q\ \text{rem}\ R\,,\]

meaning that \(X = Q \cdot Y + R\) with \(0 \le R < Y.\) Then, the successive division for converting decimal number \(N_{10}\) into \(N_b\) in a system with base \(b\) works as follows. Divide \(N_{10}\) by base \(b,\) and record the remainder as digit \(a_0 = R.\) Repeat the division with quotient \(Q\) until \(Q\) equals zero. As an example, we convert \(11_{10}\) into a binary number once again:

\[\begin{eqnarray*} 11\, /\, 2 &=& 5\ \text{rem}\ 1 \\ 5\, /\, 2 &=& 2\ \text{rem}\ 1 \\ 2\, /\, 2 &=& 1\ \text{rem}\ 0 \\ 1\, /\, 2 &=& 0\ \text{rem}\ 1 \end{eqnarray*}\]

The remainder of the first division is the least significant bit \(a_0 = 1.\) The next steps yield bits \(a_1 = 1,\) \(a_2 = 0,\) and \(a_3 = 1\) from the remainders. Since the quotient of the fourth division equals zero, the algorithm terminates. Thus, we find that \(11_{10} = 1011_2,\) as expected.

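Why does successive division work correctly? We are given decimal number \(N_{10}\) and base \(b\) of our target number system, and wish to convert \(N_{10}\) into \(N_b.\) The representation of \(N_b\) in polynomial form is

\[N_b\ =\ a_{q-1} b^{q-1} + a_{q-2} b^{q-2} + \cdots + a_1 b^1 + a_0 b^0\,.\]

The conversion determines \(N_b\) such that \(N_{10} = N_b.\) Integer division by \(b\) yields the equality

\[N_{10}\ =\ \underbrace{(a_{q-1} b^{q-2} + a_{q-2} b^{q-3} + \cdots + a_1 b^0)}_{Q} \cdot\, b + \underbrace{a_0}_{R}\,.\]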

Remainder \(R\) is digit \(a_0\) of the target number system with base \(b.\) Quotient \(Q\) is a polynomial of degree \(q-2,\) which is by one degree smaller than the original polynomial of degree \(q-1.\) Thus, if we divide the quotient successively, the degree of the polynomial decreases from step to step and the algorithm terminates when the quotient equals zero. The remainder of step \(k,\) where \(k \ge 0,\) is digit \(a_k\) of number \(N_b = a_{q-1} \ldots a_1 a_0.\)
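The remainder-recording variant of successive division maps directly onto Python's built-in `divmod`. The following is a minimal sketch with a hypothetical function name `successive_division`. It returns the digits in the order in which the algorithm produces them, that is \(a_0\) first.

```python
def successive_division(n, base):
    """Digits of nonnegative integer n in the given base, least significant first."""
    if n < 0 or base < 2:
        raise ValueError("expected n >= 0 and base >= 2")
    digits = []
    quotient = n
    while True:
        # Step k records remainder a_k and continues with the quotient.
        quotient, remainder = divmod(quotient, base)
        digits.append(remainder)
        if quotient == 0:
            break
    return digits


lsd_first = successive_division(11, 2)
print(lsd_first)                              # [1, 1, 0, 1]
print("".join(map(str, reversed(lsd_first)))) # 1011
```

Reversing the list yields the conventional notation with the most significant digit on the left.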

Knowing how to convert numbers from any number system into decimal and vice versa permits converting numbers between any two systems using the decimal number as intermediate result. Some conversions are much simpler, however, because they do not require any arithmetic except converting individual digits. Conversions between the hexadecimal and binary systems fall into this category. The conversion process is called bit grouping and relies on the fact that one hexadecimal digit covers the same range of numbers as four bits do. Recall that the value of one hexadecimal digit is in range \([0,16^1-1] = [0,15].\) A binary number of \(q=4\) bits covers the same range \([0,2^4-1] = [0,15].\) Bit grouping exploits this one-to-one correspondence between one hexadecimal digit and four bits.

To convert a hexadecimal number into a binary number, all we need to do is convert each individual hexadecimal digit into its binary representation and juxtapose the resulting bit quadruples. For example, here is the conversion of hexadecimal number \(FB7_{16}\) into binary:

\[FB7_{16}\ =\ 1111\,1011\,0111_2\,.\]

You may simply remember that \(F_{16} = 15_{10} = 1111_2,\) or use the decimal representation as intermediate result for the conversion. Analogously, the conversion of the other two digits yields \(B_{16} = 11_{10} = 1011_2\) and \(7_{16} = 7_{10} = 0111_2.\)

Bit grouping applies to the reverse conversion from binary to hexadecimal as well. Starting at the least significant bit, group the bits into quadruples, convert each 4-bit number into a hexadecimal digit, and juxtapose the hexadecimal digits. As an example, we convert binary number \(1111\,1011\,0111_2\) back into hexadecimal:

\[1111\,1011\,0111_2\ =\ FB7_{16}\,.\]

You can check that both representations have the same value as decimal number 4023.
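Bit grouping needs no arithmetic beyond a lookup of single digits, as the following Python sketch suggests. The helper names `hex_to_binary` and `binary_to_hex` are hypothetical. Padding with leading zeros ensures that the grouping into quadruples starts at the least significant bit.

```python
HEX_DIGITS = "0123456789ABCDEF"


def hex_to_binary(hex_string):
    """Replace each hexadecimal digit by its 4-bit binary representation."""
    return " ".join(format(HEX_DIGITS.index(d), "04b") for d in hex_string.upper())


def binary_to_hex(bit_string):
    """Group bits into quadruples, starting at the least significant bit."""
    bits = bit_string.replace(" ", "")
    width = -(-len(bits) // 4) * 4  # round the length up to a multiple of 4
    bits = bits.zfill(width)        # pad with leading zeros on the left
    return "".join(HEX_DIGITS[int(bits[i:i + 4], 2)] for i in range(0, width, 4))


print(hex_to_binary("FB7"))             # 1111 1011 0111
print(binary_to_hex("1111 1011 0111"))  # FB7
print(int("FB7", 16))                   # 4023
```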

The conversion of numbers with a fractional part can be split into the conversion of the integral part as described above and the fractional part. The method of positional conversion applies directly to fractions. For example, if we wish to convert binary fraction \(0.1101_2\) into a decimal fraction, we evaluate the polynomial:

\[0.1101_2\ =\ 1 \cdot 2^{-1} + 1 \cdot 2^{-2} + 0 \cdot 2^{-3} + 1 \cdot 2^{-4}\ =\ 0.5 + 0.25 + 0.0625\ =\ 0.8125_{10}\,.\]

For the conversion of a decimal fraction into another number system with base \(b,\) we may use an algorithm known as successive multiplication. It works in successive steps as follows. For step \(k,\) we are given fraction \(F^{(k)},\) initially \(F^{(1)} = N_{10}.\) Compute the product \(N^{(k)} = F^{(k)} \times b.\) The integral part of \(N^{(k)}\) is fractional digit \(a_{-k}.\) The fractional part of \(N^{(k)}\) is fraction \(F^{(k+1)}\) for the next step. Terminate if \(F^{(k+1)}\) equals zero. For example, here is the conversion of \(0.8125_{10}\) into a binary number:

\[\begin{array}{lll} 0.8125 \times 2 = 1.625 & a_{-1} = 1 & F^{(2)} = 0.625 \\ 0.625 \times 2 = 1.25 & a_{-2} = 1 & F^{(3)} = 0.25 \\ 0.25 \times 2 = 0.5 & a_{-3} = 0 & F^{(4)} = 0.5 \\ 0.5 \times 2 = 1.0 & a_{-4} = 1 & F^{(5)} = 0 \end{array}\]

In step \(k=1,\) we multiply \(F^{(1)} = 0.8125_{10}\) by base \(b=2\) to produce \(N^{(1)} = 1.625.\) The integral part of number \(N^{(1)}\) is bit \(a_{-1} = 1.\) The fractional part \(0.625\) serves as input \(F^{(2)}\) for step 2. Analogously, the remaining steps produce \(a_{-2}=1,\) \(a_{-3} = 0,\) and \(a_{-4} = 1,\) and we find that \(0.8125_{10} = 0.1101_2.\)

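Successive multiplication converts decimal fraction \(F_{10}\) into fraction \(F_b\) to base \(b.\) In polynomial form, we have

\[F_b\ =\ a_{-1} b^{-1} + a_{-2} b^{-2} + \cdots + a_{-p} b^{-p}\,.\]

The conversion shall produce \(F_b\) such that \(F_{10} = F_b.\) Multiplying this equation by \(b,\) we obtain

\[F_{10} \cdot b\ =\ a_{-1} + a_{-2} b^{-1} + \cdots + a_{-p} b^{-(p-1)}\,.\]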

The multiplication yields a number with an integral and a fractional part, which allows us to extract digit \(a_{-1}\) as the integral part of the product. Therefore, in step \(k\) of the successive multiplication, where \(k \ge 1,\) the integral part of the product is digit \(a_{-k}\) of the fraction \(F_b = 0.a_{-1} a_{-2} \ldots a_{-p}.\) If the number of digits \(p\) of the fractional part is finite, the successive multiplication terminates when the fractional part is reduced to zero.

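The successive multiplication algorithm has one pitfall. It may not terminate, because not all finite decimal fractions have a finite representation in another number system. For example, if we wish to represent fraction \(0.1_{10}\) as a binary number, the first six steps of the successive multiplication are:

\[\begin{array}{lll} 0.1 \times 2 = 0.2 & a_{-1} = 0 & F^{(2)} = 0.2 \\ 0.2 \times 2 = 0.4 & a_{-2} = 0 & F^{(3)} = 0.4 \\ 0.4 \times 2 = 0.8 & a_{-3} = 0 & F^{(4)} = 0.8 \\ 0.8 \times 2 = 1.6 & a_{-4} = 1 & F^{(5)} = 0.6 \\ 0.6 \times 2 = 1.2 & a_{-5} = 1 & F^{(6)} = 0.2 \\ 0.2 \times 2 = 0.4 & a_{-6} = 0 & F^{(7)} = 0.4 \end{array}\]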

Note that \(F^{(6)} = F^{(2)},\) that is, the successive multiplication enters a periodic cycle. In fact, \(0.1_{10} = 0.0\overline{0011}_2\) is a periodic number, where bit quadruple \(0011\) repeats infinitely often, and \(p = \infty.\) In general, the question whether the successive multiplication algorithm terminates is an instance of the famous halting problem. In the case of number conversion, we may circumvent the halting problem by stopping the algorithm when the fraction has reached a reasonable precision.
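Successive multiplication with a precision limit can be sketched in Python as shown below. The function name `successive_multiplication` and the parameter `max_digits` are hypothetical. The sketch relies on the standard library's `fractions.Fraction` for exact rational arithmetic, and the decimal fraction is passed as a string so that it is not rounded to a binary floating-point number before the conversion starts.

```python
from fractions import Fraction


def successive_multiplication(fraction, base, max_digits=16):
    """Return (digits a_-1, a_-2, ..., terminated) for a fraction in [0, 1)."""
    f = Fraction(fraction)
    if not 0 <= f < 1:
        raise ValueError("expected a fraction in the range [0, 1)")
    digits = []
    while f != 0 and len(digits) < max_digits:
        product = f * base
        digit = product.numerator // product.denominator  # integral part
        digits.append(digit)
        f = product - digit                               # fractional part
    return digits, f == 0


print(successive_multiplication("0.8125", 2))
# ([1, 1, 0, 1], True)
print(successive_multiplication("0.1", 2, max_digits=9))
# ([0, 0, 0, 1, 1, 0, 0, 1, 1], False)
```

The second return value tells the caller whether the fraction was converted exactly or cut off at `max_digits` digits.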

2.1.3. Binary Numbers

Binary numbers are the butter on the bread of digital circuit designers. There are \(2^q\) integral binary numbers with \(q\) bits in range \([0,2^q-1].\) This table lists the binary numbers with up to 4 bits:

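| decimal | q=1 | q=2 | q=3 | q=4 |
|---|---|---|---|---|
| 0 | 0 | 00 | 000 | 0000 |
| 1 | 1 | 01 | 001 | 0001 |
| 2 |  | 10 | 010 | 0010 |
| 3 |  | 11 | 011 | 0011 |
| 4 |  |  | 100 | 0100 |
| 5 |  |  | 101 | 0101 |
| 6 |  |  | 110 | 0110 |
| 7 |  |  | 111 | 0111 |
| 8 |  |  |  | 1000 |
| 9 |  |  |  | 1001 |
| 10 |  |  |  | 1010 |
| 11 |  |  |  | 1011 |
| 12 |  |  |  | 1100 |
| 13 |  |  |  | 1101 |
| 14 |  |  |  | 1110 |
| 15 |  |  |  | 1111 |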

Since the polynomial for binary numbers sums up powers of 2, it is handy to memorize the values of the first powers of 2:

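| \(2^0\) | \(2^1\) | \(2^2\) | \(2^3\) | \(2^4\) | \(2^5\) | \(2^6\) | \(2^7\) | \(2^8\) | \(2^9\) | \(2^{10}\) | \(2^{11}\) | \(2^{12}\) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 2 | 4 | 8 | 16 | 32 | 64 | 128 | 256 | 512 | 1024 | 2048 | 4096 |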

Knowing a few powers of 2 and the exponent rule:

\[2^{m+n}\ =\ 2^m \times 2^n\]

enables you to quickly compute those powers of 2 that you tend to forget. For example, if you need to know the value of \(2^6\) and remember that \(2^5 = 32,\) then you can derive \(2^6\) by computing \(32 \times 2 = 64,\) because \(2^6 = 2^{5+1} = 2^5 \times 2^1 = 32 \times 2.\) If you cannot remember the value of \(2^9\) but do remember that \(2^{10} = 1024,\) then the exponent rule yields \(2^9 = 2^{10 - 1} = 2^{10} \times 2^{-1} = 1024\, /\, 2 = 512\) in a twinkling of an eye. At this point, converting a binary number like \(10\,0100\,0001_2\) into decimal by positional conversion is faster in your head than you can type into a calculator. The polynomial evaluation reduces to adding three powers of 2 in positions 0, 6, and 9: \(2^0 + 2^6 + 2^9 = 1 + 64 + 512 = 577.\)
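The mental shortcut of adding the powers of 2 at the positions of the 1 bits can be mirrored in a short Python sketch. The function name `binary_to_decimal` is hypothetical, and spaces in the bit string are treated as digit separators.

```python
def binary_to_decimal(bit_string):
    """Return the positions of the 1 bits and the sum of their powers of 2."""
    bits = bit_string.replace(" ", "")
    # Position 0 is the rightmost bit, so enumerate the reversed string.
    positions = [i for i, bit in enumerate(reversed(bits)) if bit == "1"]
    return positions, sum(2**i for i in positions)


print(binary_to_decimal("10 0100 0001"))  # ([0, 6, 9], 577)
```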

The powers of 2 appear ubiquitously in computer technology. In particular, large powers of 2 occur frequently these days. For example, some of us brag about their TeraByte harddisk in their PetaFlop computer. The first powers of \(2^{10}\) are commonly referred to by their names of Greek origin:

| Power of 2 | Name | Value |
|---|---|---|
| \(2^{10}\) | Kilo | 1,024 |
| \(2^{20}\) | Mega | 1,048,576 |
| \(2^{30}\) | Giga | 1,073,741,824 |
| \(2^{40}\) | Tera | 1,099,511,627,776 |
| \(2^{50}\) | Peta | 1,125,899,906,842,624 |
| \(2^{60}\) | Exa | 1,152,921,504,606,846,976 |
| \(2^{70}\) | Zetta | 1,180,591,620,717,411,303,424 |
| \(2^{80}\) | Yotta | 1,208,925,819,614,629,174,706,176 |

These are the same names as used for the powers of thousands in the decimal system, where \(10^3\) = 1 Kilo, \(10^6\) = 1 Mega, \(10^9\) = 1 Giga, and so on, but the values differ. The power of 2 is slightly larger than the corresponding power of 10.

When working with binary numbers it is convenient to refer to specific bits or groups of bits as a whole. A group of 8 bits is called a byte. The most significant bit (msb) and the least significant bit (lsb) of a byte are the leftmost and rightmost bits, respectively. For example, byte \(1011\,0110_2\) has msb 1 and lsb 0. A group of 4 bits is called a nibble.

Since a 4-bit number can be represented as one hexadecimal digit, one byte can be represented with two hexadecimal digits, one hexadecimal digit per nibble. For example, in \(1011\,0110_2 = B6_{16}\) the most significant nibble is converted into hexadecimal digit \(B_{16}\) and the least significant nibble into \(6_{16}.\)
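In software, the msb, the lsb, and the nibbles of a byte are usually extracted with shift and mask operations. The sketch below uses hypothetical helper names and assumes that its argument is an integer in range \([0, 255].\)

```python
def msb(byte):
    """Most significant bit of an 8-bit number."""
    return (byte >> 7) & 1


def lsb(byte):
    """Least significant bit of an 8-bit number."""
    return byte & 1


def nibbles(byte):
    """Most and least significant nibbles of an 8-bit number."""
    return (byte >> 4) & 0xF, byte & 0xF


byte = 0b1011_0110
high, low = nibbles(byte)
print(msb(byte), lsb(byte))                  # 1 0
print(format(high, "X"), format(low, "X"))   # B 6
print(format(byte, "02X"))                   # B6
```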

For readability, we may prefer representing larger binary numbers in hexadecimal format. For example, hexadecimal number

has eight digits, each of which is associated with one nibble or four bits, and the corresponding binary number is a 32-bit number. In this context, the MSB denotes the most significant byte and the LSB the least significant byte. Both consist of two nibbles or two hexadecimal digits. The most significant nibble \(D_{16}\) of the MSB is also the most significant nibble of the 32-bit number, and the least significant nibble \(F_{16}\) of the LSB is the least significant nibble of the 32-bit number.

We study some connections between sets and binary numbers, starting with set \(\mathcal{B} = \{0, 1\}\) of binary values.

- Derive the cardinality of product set \(\mathcal{B}^4.\)

- Determine the decimal values of the set of unsigned binary numbers represented by \(\mathcal{B}^4.\)

- Determine the range of the unsigned binary numbers represented by \(\mathcal{B}^4.\)

- Represent the unsigned binary numbers of \(\mathcal{B}^4\) as a polynomial.

- Determine the value of unsigned binary numbers \(0011_2\) and \(1001_2\) using the polynomial representation.

The product set of two sets is the set of all ordered pairs, whose \(1^{st}\) element is from the \(1^{st}\) set and the \(2^{nd}\) element from the \(2^{nd}\) set. Given set \(\mathcal{B},\) the product set of \(\mathcal{B}\) with itself is

\[\mathcal{B}^2 = \mathcal{B} \times \mathcal{B} = \{ 0, 1 \} \times \{ 0, 1\} = \{ (0,0), (0,1), (1,0), (1,1)\}\,.\]

Given \(\mathcal{B}^2,\) we obtain \(\mathcal{B}^4\) by forming the product set of \(\mathcal{B}^2\) with itself:

\[\begin{eqnarray*} \mathcal{B}^4 &=& \mathcal{B}^2 \times \mathcal{B}^2 \\ &=& \{ (0,0), (0,1), (1,0), (1,1)\} \times \{ (0,0), (0,1), (1,0), (1,1)\} \\ &=& \{ (0,0,0,0), (0,0,0,1), (0,0,1,0), (0,0,1,1), (0,1,0,0), (0,1,0,1), (0,1,1,0), (0,1,1,1), (1,0,0,0), (1,0,0,1), (1,0,1,0), (1,0,1,1), (1,1,0,0), (1,1,0,1), (1,1,1,0), (1,1,1,1) \} \end{eqnarray*}\]

To save parentheses, we write the elements of \(\mathcal{B}^4\) as quadruples rather than as pairs of pairs.

The cardinality of a set is the number of elements in the set. We write \(|\mathcal{B}|\) to denote the cardinality of set \(\mathcal{B}.\) Then, \(|\mathcal{B}| = 2,\) \(|\mathcal{B}^2| = 4,\) and \(|\mathcal{B}^4| = 16.\)

If we write the elements of set \(\mathcal{B}^4\) by dropping the commas and parentheses of the quadruple notation, we obtain strings of 0’s and 1’s as set elements:

\[\mathcal{B}^4_2 = \{ 0000, 0001, 0010, 0011, 0100, 0101, 0110, 0111, 1000, 1001, 1010, 1011, 1100, 1101, 1110, 1111 \}\,.\]

Interpreting each set element as an unsigned binary number, we obtain the decimal representation of \(\mathcal{B}^4\) by listing the first \(|\mathcal{B}^4| = 16\) decimal numbers in increasing order starting at 0:

\[\mathcal{B}^4_{10} = \{ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15 \}\,.\]

The unsigned binary number in position \(i\) of set \(\mathcal{B}^4_2\) represents the decimal number in the same position of set \(\mathcal{B}^4_{10}.\)

A range specifies a set of consecutive numbers with its smallest and largest elements. In case of \(\mathcal{B}^4,\) we may write the decimal form of the set as range \(\mathcal{B}^4_{10} = [0, 15]\) and the binary form as the range

\[\mathcal{B}^4_2 = [0000, 1111]\,.\]

Unsigned binary number \(N = a_{q-1} a_{q-2} \ldots a_0\) with \(q\) bits is represented by polynomial

\[N = \sum_{i=0}^{q-1} a_i 2^i\]

with base \(b = 2.\) The elements of set \(\mathcal{B}^4_2\) have \(q=4\) bits each. Hence, element \(a_3 a_2 a_1 a_0\) of \(\mathcal{B}^4_2\) is represented by polynomial

\[N = a_3 \cdot 2^3 + a_2 \cdot 2^2 + a_1 \cdot 2^1 + a_0 \cdot 2^0\,.\]

We determine the decimal values of binary numbers \(x = 0011_2\) and \(y = 1001_2\) by positional conversion:

\[\begin{eqnarray*} x &=& 0011_2 \\ &=& 0 \cdot 2^3 + 0 \cdot 2^2 + 1 \cdot 2^1 + 1 \cdot 2^0 \\ &=& 3_{10}\,. \end{eqnarray*}\]

Analogously, we obtain

\[\begin{eqnarray*} y &=& 1001_2 \\ &=& 1 \cdot 2^3 + 0 \cdot 2^2 + 0 \cdot 2^1 + 1 \cdot 2^0 \\ &=& 9_{10}\,. \end{eqnarray*}\]
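The results of this example can be reproduced with `itertools.product` from the Python standard library, which forms the product set directly as quadruples. The variable names are chosen for illustration only.

```python
from itertools import product

B = (0, 1)
B4 = list(product(B, repeat=4))  # all quadruples (a3, a2, a1, a0)
print(len(B4))                   # 16, the cardinality of the product set

# Drop commas and parentheses to obtain strings of 0's and 1's.
strings = ["".join(map(str, t)) for t in B4]
# Evaluate the polynomial a3*2^3 + a2*2^2 + a1*2^1 + a0*2^0 for each element.
values = [sum(a * 2**i for i, a in enumerate(reversed(t))) for t in B4]

print(strings[0], strings[-1])    # 0000 1111
print(values == list(range(16)))  # True
print(values[strings.index("0011")], values[strings.index("1001")])  # 3 9
```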

2.1.4. Negative Numbers

We distinguish between unsigned and signed binary numbers. The unsigned binary numbers with \(q\) bits we discussed so far are in range \([0,2^q-1],\) i.e. they are nonnegative. Signed binary numbers extend this range to cover both positive and negative numbers.

In our familiar decimal number system, we use the plus and minus signs as special symbols to denote positive and negative numbers. For example, number \(-5\) denotes a negative 5 by means of an explicit minus sign. Number \(+5\) uses an explicit plus sign for a positive 5. Usually, we assume that the plus sign is implicit, though, that is, we interpret number \(5\) as a positive 5. Number \(0\) is special because zero separates the positive from the negative numbers, and is independent of the sign.

The use of special symbols for the sign of a number is ill-suited for the implementation of signed binary numbers with digital circuits. Fortunately, the sign of a number can have one of two values only, positive or negative. Therefore, we might be tempted to represent the sign with a single bit prepended to a binary number. For example, if we consider 4-bit binary numbers, we may interpret the most significant bit as the sign bit and the remaining three bits as the magnitude. If we interpret value 0 as plus sign and 1 as minus sign, then \(0101_2\) is a positive \(101_2 = 5_{10}\) and \(1101_2 = -5_{10}\) is a negative 5. In this signed magnitude notation there are two binary numbers that both represent zero, \(0000_2 = +0_{10}\) and \(1000_2 = -0_{10}.\) Having two representations for one number is undesirable. Complement number systems avoid this deficiency in the representation of signed numbers.
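A small Python sketch makes the double zero of the signed magnitude notation visible. The function name `signed_magnitude_value` is hypothetical, and the bit string is assumed to have at least two bits, the leftmost being the sign bit.

```python
def signed_magnitude_value(bit_string):
    """Value of a signed magnitude number: sign bit followed by the magnitude."""
    sign_bit = bit_string[0]
    magnitude = int(bit_string[1:], 2)
    return -magnitude if sign_bit == "1" else magnitude


print(signed_magnitude_value("0101"), signed_magnitude_value("1101"))  # 5 -5
print(signed_magnitude_value("0000"), signed_magnitude_value("1000"))  # 0 0
```

Python integers have no negative zero, so both bit patterns `0000` and `1000` evaluate to the same value 0.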

A complement number system negates a number by forming its complement. Different complement number systems depend on the particular choice of the complement. The radix complement \(\overline{N}_{b's}\) of a \(q\)-digit number \(N\) with base \(b\) is defined as

\[\overline{N}_{b's}\ =\ b^q - N\,.\]

The name radix complement emphasizes its dependence on radix \(b,\) which is a synonym for base. The diminished radix complement \(\overline{N}_{b-1's}\) of a \(q\)-digit number \(N\) with base \(b\) is defined as

\[\overline{N}_{b-1's}\ =\ (b^q - 1) - N\,.\]

Obviously, the two complements are related such that

\[\overline{N}_{b's}\ =\ \overline{N}_{b-1's} + 1\,.\]

The complements exist for positional number systems of any base \(b.\) For example, for \(b=10\) and \(q=1\) we consider numbers that are the decimal digits. The radix complement is the 10’s complement \(\overline{N}_{10's} = 10 - N\) and the diminished radix complement the 9’s complement \(\overline{N}_{9's} = 9 - N.\) The 10’s complement of 3 is \(\overline{3}_{10's} = 10 - 3 = 7\) and the 9’s complement is \(\overline{3}_{9's} = 9 - 3 = 6.\) Note that the 10’s complement of 0, \(\overline{0}_{10's} = 10 - 0 = 10,\) is not a single digit but a 2-digit number, whereas the 9’s complements of all digits are digits themselves:

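| \(N\) | \(0\) | \(1\) | \(2\) | \(3\) | \(4\) | \(5\) | \(6\) | \(7\) | \(8\) | \(9\) |
|---|---|---|---|---|---|---|---|---|---|---|
| \(\overline{N}_{9's}\) | \(9\) | \(8\) | \(7\) | \(6\) | \(5\) | \(4\) | \(3\) | \(2\) | \(1\) | \(0\) |
| \(\overline{N}_{10's}\) | \(10\) | \(9\) | \(8\) | \(7\) | \(6\) | \(5\) | \(4\) | \(3\) | \(2\) | \(1\) |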

If we drop the most significant digit of the 10’s complement of 0, then the 10’s complement of 0 equals 0, and all digits have a unique single-digit complement. For numbers with \(q>1\) digits, the complements are computed analogously. For example, 4-digit number 4362 has 10’s complement \(\overline{4362}_{10's} = 10^4 - 4362 = 5638\) and 9’s complement \(\overline{4362}_{9's} = (10^4 - 1) - 4362 = 5637.\)
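The two complements translate into one-line Python functions. The names `radix_complement` and `diminished_radix_complement` are hypothetical, and the number of digits \(q\) must be passed explicitly because it is not encoded in the integer itself.

```python
def radix_complement(n, base, q):
    """Radix complement b**q - n of a q-digit number n."""
    return base**q - n


def diminished_radix_complement(n, base, q):
    """Diminished radix complement (b**q - 1) - n of a q-digit number n."""
    return (base**q - 1) - n


print(radix_complement(4362, 10, 4))             # 5638
print(diminished_radix_complement(4362, 10, 4))  # 5637
print([diminished_radix_complement(d, 10, 1) for d in range(10)])
# [9, 8, 7, 6, 5, 4, 3, 2, 1, 0]

# Dropping the most significant digit keeps the result within q digits.
print(radix_complement(0, 10, 1) % 10**1)        # 0
```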

The corresponding complements for binary numbers are the 1’s complement and the 2’s complement. The 1’s complement is the diminished radix complement, and the 2’s complement is the radix complement. For single bits, that is binary numbers with \(b=2\) and \(q=1,\) the 1’s complements are

\[\overline{0}_{1's}\ =\ (2^1 - 1) - 0\ =\ 1\,, \qquad \overline{1}_{1's}\ =\ (2^1 - 1) - 1\ =\ 0\,.\]

When viewed as a logical function, the 1’s complement of a single bit is its complement or negation. This is straightforward to realize in a digital circuit, because we can use an inverter to compute the 1’s complement. The 2’s complements of a single bit are

\[\overline{0}_{2's}\ =\ 2^1 - 0\ =\ 10_2\,, \qquad \overline{1}_{2's}\ =\ 2^1 - 1\ =\ 1\,.\]

At first glance, the 2’s complements look useless. First of all, the 2’s complement of 0 is a 2-bit number. Secondly, if we drop the most significant bit, then the 2’s complement \(\overline{N}_{2's}\) equals \(N.\) However, this conclusion is deceptive. For binary numbers with \(q>1\) bits, the 2’s complement turns out to be better suited for representing signed numbers than the 1’s complement.

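Consider using the 1’s complement to represent signed binary numbers by dedicating msb \(a_{q-1}\) as sign bit, and interpreting the value of the \(q\)-bit number \(N,\) where \(q > 1,\) such that

\[V_{1's}(N)\ =\ \begin{cases} N\,, & \text{if } a_{q-1} = 0\,, \\ -\overline{N}_{1's}\,, & \text{if } a_{q-1} = 1\,. \end{cases}\]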

For example, the 3-bit numbers with \(q=3\) have 1’s complement \(\overline{N}_{1's} = (2^3-1)-N = 7-N.\) Thus, given binary number \(N = 010_2,\) we derive its value by inspecting sign bit \(a_{q-1} = a_2 = 0.\) Since the sign bit is 0, the value of the number is its magnitude \(V_{1's}(N) = N = 010_2 = 2_{10}.\) If the sign bit is 1, as in number \(N = 110_2,\) then we derive the magnitude of the negative number by forming its 1’s complement. Since \(N = 110_2 = 6_{10},\) we obtain the 1’s complement \(\overline{N}_{1's} = 7 - N = 7 - 6 = 1,\) and conclude that the value of \(N\) is \(V_{1's}(N) = -\overline{N}_{1's} = -1.\) The table below shows the values of all 3-bit binary numbers and their 1’s complements in binary and decimal formats, and their values if interpreted as signed binary numbers.

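| \(N_2\) | \(\overline{N}_{1's,2}\) | \(N_{10}\) | \(\overline{N}_{1's,10}\) | \(V_{1's}(N)\) |
|---|---|---|---|---|
| 000 | 111 | 0 | 7 | 0 |
| 001 | 110 | 1 | 6 | 1 |
| 010 | 101 | 2 | 5 | 2 |
| 011 | 100 | 3 | 4 | 3 |
| 100 | 011 | 4 | 3 | -3 |
| 101 | 010 | 5 | 2 | -2 |
| 110 | 001 | 6 | 1 | -1 |
| 111 | 000 | 7 | 0 | -0 |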

We observe that the 1’s complement \(\overline{N}_{1's}\) of number \(N\) is the bit-wise 1’s complement. In a digital circuit, this is straightforward to implement with three inverters, and for \(q\)-bit numbers with \(q\) inverters. There is a blemish, however, because the signed values include two representations of zero, \(000_2 = +0_{10}\) and \(111_2 = -0_{10}.\) The 2’s complement does not have this problem.
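The table of 3-bit 1’s complement numbers can be regenerated with a few lines of Python. The function names are hypothetical. The bit-wise complement is computed with an exclusive-or against a mask of \(q\) ones, which equals \((2^q - 1) - N\) for a \(q\)-bit number.

```python
def ones_complement(n, q):
    """Bit-wise complement of the q-bit number n, equal to (2**q - 1) - n."""
    return n ^ ((1 << q) - 1)


def value_ones_complement(n, q):
    """Signed value of the q-bit number n in 1's complement format."""
    sign_bit = (n >> (q - 1)) & 1
    return -ones_complement(n, q) if sign_bit else n


for n in range(8):
    print(format(n, "03b"), format(ones_complement(n, 3), "03b"),
          value_ones_complement(n, 3))
```

Since Python integers do not distinguish \(-0\) from \(+0,\) the rows for `000` and `111` both print value 0, which is exactly the double representation of zero.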

When using the 2’s complement to represent signed binary numbers, we dedicate msb \(a_{q-1}\) as sign bit, and interpret the value of \(q\)-bit number \(N,\) where \(q > 1,\) as

Alternatively, we can express the signed value of binary number \(N\) using a polynomial with a negated msb:

\[V_{2's}(N) = -a_{q-1} \cdot 2^{q-1} + \sum_{i=0}^{q-2} a_i \cdot 2^i\,.\]

This equivalent expression for \(V_{2's}(N)\) obviates the case distinction and may be easier to remember.

For 3-bit number \(N,\) the 2’s complement is \(\overline{N}_{2's} = 2^3 - N = 8 - N.\) The table below shows the values of all 3-bit binary numbers and their 2’s complements in binary and decimal formats, and their values if interpreted as signed binary 2’s complement numbers.

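| \(N_2\) | \(\overline{N}_{2's,2}\) | \(N_{10}\) | \(\overline{N}_{2's,10}\) | \(V_{2's}(N)\) |
|---|---|---|---|---|
| 000 | 1000 | 0 | 8 | 0 |
| 001 | 111 | 1 | 7 | 1 |
| 010 | 110 | 2 | 6 | 2 |
| 011 | 101 | 3 | 5 | 3 |
| 100 | 100 | 4 | 4 | -4 |
| 101 | 011 | 5 | 3 | -3 |
| 110 | 010 | 6 | 2 | -2 |
| 111 | 001 | 7 | 1 | -1 |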

Observe that the values are unique. In particular, there is only one representation of value zero. It is straightforward to show that the values of the signed 2’s complement numbers cover the range \([-2^{q-1}, 2^{q-1}-1].\) In the table above, the signed 3-bit numbers cover the range \([-2^2, 2^2-1] = [-4,3].\) The 2’s complement seems difficult to compute, although we will see that this is not the case when we rely on the equality \(\overline{N}_{2's} = \overline{N}_{1's} + 1.\) In a digital circuit, we use \(q\) inverters to form the 1’s complement. Then, adding constant 1 turns out to be almost for free, because we can treat the 1 as the carry-in of an adder circuit. The 2’s complement is not without a blemish either. The 2’s complement of the \(q\)-bit zero has \(q+1\) bits. However, if we ignore the most significant 1, then the remaining \(q\) bits yield the desired value 0.
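The two ways of interpreting a signed 2’s complement number, by case distinction on the sign bit and by the polynomial with a negated msb, can be compared with a few lines of Python. This is a minimal sketch rather than part of the original text. The function names `value_case` and `value_poly` are hypothetical, and a number is passed as its unsigned value `n` together with the bit width `q`.

```python
def value_case(n: int, q: int) -> int:
    """Signed value via the case distinction on the sign bit."""
    sign_bit = (n >> (q - 1)) & 1
    twos_complement = (1 << q) - n            # 2^q - N
    return n if sign_bit == 0 else -twos_complement


def value_poly(n: int, q: int) -> int:
    """Signed value via the polynomial with a negated msb."""
    bits = [(n >> i) & 1 for i in range(q)]   # bits[i] is a_i
    lower = sum(bits[i] * 2 ** i for i in range(q - 1))
    return -bits[q - 1] * 2 ** (q - 1) + lower


q = 3
values = [value_case(n, q) for n in range(2 ** q)]
print(values)  # [0, 1, 2, 3, -4, -3, -2, -1]
```

The printed list reproduces the rightmost column of the 3-bit table, and both functions agree for every bit pattern, which is why the polynomial form can replace the case distinction.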

The 2’s complement format is the established standard for representing signed binary numbers in today’s computers. Thus, digital circuit designers need to know how to convert decimal numbers to 2’s complement format and vice versa. Nonnegative numbers are converted as described above. Negative numbers require forming the 2’s complement. As an example consider the conversion of the 4-bit 2’s complement number \(1101_2\) into decimal. The number is negative, because the sign bit is 1. We proceed in two steps:

- Form the 1’s complement: \(1101_2 \rightarrow 0010_2.\)

- Add 1 to the 1’s complement. Since \(0010_2 = 2_{10},\) we obtain \(2_{10} + 1_{10} = 3_{10}.\)

The result \(3_{10}\) is the magnitude of the number. Since the sign bit is 1, we conclude that \(1101_2 = -3_{10}\) in decimal.

The opposite direction works analogously. For example, convert decimal number \(-6_{10}\) into 2’s complement format with \(q=4\) bits. The magnitude of the decimal number is \(6_{10} = 0110_2.\) Again, we proceed in two steps:

- Form the 1’s complement: \(0110_2 \rightarrow 1001_2.\)

- Add 1 to the 1’s complement. Since \(1001_2 = 9_{10},\) we obtain \(9_{10} + 1_{10} = 10_{10},\) or in binary format \(1010_2.\)

We conclude that \(-6_{10} = 1010_2\) in 4-bit 2’s complement format. The good news is that we need to remember just one method for both directions of the conversion of negative numbers, that is to form the 2’s complement.
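The two-step procedure, form the 1’s complement and add 1, can be sketched in Python on bit strings. The helper names below are hypothetical and chosen for illustration only. The sketch does not check whether a decimal value fits into \(q\) bits.

```python
def ones_complement(bits: str) -> str:
    """Flip every bit of a binary string."""
    return "".join("1" if b == "0" else "0" for b in bits)


def twos_complement(bits: str) -> str:
    """Form the 1's complement, add 1, and keep only q bits."""
    q = len(bits)
    total = (int(ones_complement(bits), 2) + 1) % (1 << q)
    return format(total, f"0{q}b")


def to_decimal(bits: str) -> int:
    """Decimal value of a q-bit 2's complement string."""
    if bits[0] == "0":
        return int(bits, 2)
    return -int(twos_complement(bits), 2)


def to_binary(value: int, q: int) -> str:
    """q-bit 2's complement string of a decimal value (range not checked)."""
    magnitude = format(abs(value), f"0{q}b")
    return magnitude if value >= 0 else twos_complement(magnitude)


print(to_decimal("1101"))   # -3
print(to_binary(-6, 4))     # 1010
```

Both directions call the same `twos_complement` helper, mirroring the observation that one method suffices for converting negative numbers.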

Determine the decimal values of signed binary numbers \(A_2 = 1011_2\) and \(B_2 = 0101_2.\)

Consider \(A_2 = 1011_2\) first. The number has \(q = 4\) bits, and the sign bit, the msb, equals 1. Therefore, bit string \(1011\) represents a negative number. By default, we represent signed binary numbers in 2’s complement format. To determine the magnitude of a negative binary number, we form its 2’s complement:

- Form the 1’s complement: \(\quad A_2 = 1011_2 \rightarrow \overline{A}_{1's,2} = 0100_2.\)

- Add 1: \(\quad \overline{A}_{2's,2} = \overline{A}_{1's,2} + 1_2 = 0101_2.\)

Converting the 2’s complement \(\overline{A}_{2's,2}\) into decimal format yields the magnitude \(\overline{A}_{2's,10} = 5_{10}.\) Therefore, the decimal value of \(A_2\) is \(A_{10} = V_{2's}(A_2) = -5_{10}.\)

Now, consider \(B_2 = 0101_2.\) This binary number has \(q=4\) bits with a sign bit of 0. The value of a positive binary number in 2’s complement format is the value of the number interpreted as unsigned binary number. We may use positional conversion to determine the positive decimal value

\[B_{10} = V_{2's}(B_2) = 0 \cdot 2^3 + 1 \cdot 2^2 + 0 \cdot 2^1 + 1 \cdot 2^0 = 5_{10}\,.\]

As an alternative, we may compute the 2’s complement of a binary number by first converting its unsigned value into decimal format, and then applying the decimal arithmetic of the definition of the 2’s complement, \(\overline{N}_{2's} = 2^q - N.\) For negative binary number \(A_2 = 1011_2\) we form the 2’s complement in two steps:

- Interpret \(A_2 = 1011_2\) as unsigned binary number, and convert to decimal:

\[A_{10u} = 1 \cdot 2^3 + 0 \cdot 2^2 + 1 \cdot 2^1 + 1 \cdot 2^0 = 11_{10}\,.\]

- Compute the 2’s complement of 4-bit number \(A_2\):

\[\overline{A}_{2's} = 2^4 - A_{10u} = 16 - 11 = 5_{10}\,.\]

Since the 2’s complement \(\overline{A}_{2's}\) is the magnitude of the negative number, we obtain decimal value \(A_{10} = -5_{10}.\)

As a sanity check, we may verify that the decimal values are within the range of the 4-bit signed binary numbers. The signed binary numbers in 2’s complement format with \(q=4\) bits span number range \([-2^{q-1}, 2^{q-1}-1] = [-2^3, 2^3-1] = [-8,7].\) Thus, our decimal values \(A_{10} = -5\) and \(B_{10} = 5\) are within the range of 4-bit signed binary numbers.
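The arithmetic route via \(\overline{N}_{2's} = 2^q - N\) and the range check can be sketched as follows. The function names `signed_value` and `in_range` are hypothetical and serve illustration only.

```python
def signed_value(bits: str) -> int:
    """Value of a q-bit 2's complement string, using 2^q - N for negatives."""
    q = len(bits)
    unsigned = int(bits, 2)                 # interpret as unsigned number
    if bits[0] == "0":
        return unsigned
    return -((1 << q) - unsigned)           # magnitude is 2^q - N


def in_range(value: int, q: int) -> bool:
    """True if value lies in [-2^(q-1), 2^(q-1) - 1]."""
    return -(1 << (q - 1)) <= value <= (1 << (q - 1)) - 1


a = signed_value("1011")
b = signed_value("0101")
print(a, b)                                # -5 5
print(in_range(a, 4), in_range(b, 4))      # True True
```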

Determine the representations of \(A_{10} = -53_{10}\) and \(B_{10} = +53_{10}\) as 8-bit signed binary numbers.

By default, we represent signed binary numbers in 2’s complement format. When needed, we refer to other representations, including 1’s complement or signed magnitude, explicitly. We note that both numbers, \(A_{10} = -53_{10}\) and \(B_{10} = +53_{10},\) can be represented with 8 bits, because they are within the range of 8-bit numbers in 2’s complement format: \([-2^{8-1},2^{8-1}-1] = [-128, 127].\)

To represent negative number \(A_{10} = -53_{10}\) as a signed binary number, we perform four steps:

- Convert the decimal magnitude to binary: \(\quad 53_{10} = 110101_2.\)

- Extend the magnitude with leading zeros into an unsigned 8-bit number: \(\quad A_{2u} = 0011\,0101_2.\)

- Form the 1’s complement: \(\quad \overline{A}_{1's} = 1100\,1010_2.\)

- Add 1: \(\quad \overline{A}_{2's} = 1100\,1011_2.\)

The resulting 2’s complement \(\overline{A}_{2's}\) is the signed binary representation of \(A_{10} = -53_{10} = A_2 = 1100\,1011_2.\) The msb of \(A_2\) serves as quick sanity check: it is the sign bit of \(A_2,\) and is equal to 1 as it should be for a negative number.

The representation of positive number \(B_{10} = +53_{10}\) as 8-bit signed binary number is easier to determine than that of a negative number, because we do not need to form the 2’s complement. Instead, we convert the decimal number into binary format and extend the number with leading zeros, as above:

- Convert decimal number to binary: \(\quad 53_{10} = 110101_2.\)

- Extend the number with leading zeros into an unsigned 8-bit number: \(\quad B_2 = 0011\,0101_2.\)

We observe that the resulting signed binary number \(B_2 = 0011\,0101_2 = +53_{10}\) is equal to intermediate unsigned 8-bit number \(A_{2u}\) in step 2 of the conversion of negative number \(A_{10} = -53_{10}.\) When representing a positive number as signed binary number, we save the 2’s complementation in steps 3 and 4 of the conversion procedure. The sign bit of \(B_2\) serves as sanity check: it is 0 as it should be for a positive number.

We practice number conversion between decimal and binary numbers.

- Convert unsigned binary number \(100101.101_2\) to decimal.

- Convert decimal number \(157.1875_{10}\) to unsigned binary.

- Determine the 4-bit 2’s complement representation of \(-3_{10}.\)

- Convert 5-bit signed binary number \(01011_2\) to decimal.

- Convert 5-bit signed binary number \(11011_2\) to decimal.

Use positional conversion to convert an unsigned binary number to decimal:

\[\begin{eqnarray*} 100101.101_2 &=& 1 \cdot 2^5 + 0 \cdot 2^4 + 0 \cdot 2^3 + 1 \cdot 2^2 + 0 \cdot 2^1 + 1 \cdot 2^0 + 1 \cdot 2^{-1} + 0 \cdot 2^{-2} + 1 \cdot 2^{-3} \\ &=& 32 + 4 + 1 + \frac{1}{2} + \frac{1}{8} \\ &=& 37.625_{10}\,. \end{eqnarray*}\]

To convert decimal number \(157.1875_{10}\) to unsigned binary, we convert integral part \(157_{10}\) and fractional part \(0.1875_{10}\) separately. Use successive division for the integral part:

\[\begin{eqnarray*} 157\, /\, 2 &=& 78\ \text{rem}\ 1 \\ 78\, /\, 2 &=& 39\ \text{rem}\ 0 \\ 39\, /\, 2 &=& 19\ \text{rem}\ 1 \\ 19\, /\, 2 &=& 9\phantom{9}\ \text{rem}\ 1 \\ 9\, /\, 2 &=& 4\phantom{9}\ \text{rem}\ 1 \\ 4\, /\, 2 &=& 2\phantom{9}\ \text{rem}\ 0 \\ 2\, /\, 2 &=& 1\phantom{9}\ \text{rem}\ 0 \\ 1\, /\, 2 &=& 0\phantom{9}\ \text{rem}\ 1 \end{eqnarray*}\]

Gather the remainder bits bottom up to obtain \(157_{10} = 10011101_2.\)

For the fractional part, we use successive multiplication:

\[\begin{eqnarray*} 0.1875\, \cdot\, 2 &=& 0.375 \\ 0.375\, \cdot\, 2 &=& 0.75 \\ 0.75\, \cdot\, 2 &=& 1.5 \\ 0.5\, \cdot\, 2 &=& 1.0 \end{eqnarray*}\]

Gather the integral bits of the products top down to obtain \(0.1875_{10} = 0.0011_2.\)

Combining the integral and fractional parts, we find

\[157.1875_{10} = 10011101.0011_2\,.\]

Decimal number \(-3_{10}\) is negative. Therefore, we want to form the 2’s complement of the binary magnitude. We proceed in four steps:

- Convert the decimal magnitude to binary: \(\quad 3_{10} = 11_2\)

- Zero-extend the magnitude into an unsigned 4-bit number: \(\quad A_{2u} = 0011_2\)

- Form the 1’s complement: \(\quad \overline{A}_{1's} = 1100_2\)

- Add 1: \(\quad \overline{A}_{2's} = 1101_2\)

Thus, we find that \(-3_{10} = 1101_2\) in 4-bit 2’s complement representation.

Given 5-bit signed binary number \(01011_2,\) we assume a 5-bit 2’s complement representation. We observe that the sign bit (msb) has value 0. Therefore, we use positional conversion to obtain the positive decimal number:

\[\begin{eqnarray*} 01011_2 &=& 0 \cdot 2^4 + 1 \cdot 2^3 + 0 \cdot 2^2 + 1 \cdot 2^1 + 1 \cdot 2^0 \\ &=& 11_{10}\,. \end{eqnarray*}\]

The 5-bit signed binary number \(A = 11011_2\) has a sign bit (msb) of value 1. Therefore, the number is negative, and we form the 2’s complement to determine the binary magnitude.

- Form 1’s complement: \(\quad \overline{A}_{1's} = 00100_2\)

- Add 1: \(\quad \overline{A}_{2's} = 00101_2\)

Next, we convert the 2’s complement to decimal, which gives us the decimal magnitude:

\[101_2 = 1 \cdot 2^2 + 0 \cdot 2^1 + 1 \cdot 2^0 = 5_{10}\,,\]

and conclude that \(11011_2 = -5_{10}.\)
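The unsigned conversions practiced in this exercise, positional conversion, successive division, and successive multiplication, can be sketched in Python. This is an illustrative sketch under stated assumptions: the function names are hypothetical, only nonnegative numbers are handled, and `max_fraction_bits` is an assumed cutoff for fractions that do not terminate in binary. Exact rational arithmetic from the standard `fractions` module avoids rounding effects.

```python
from fractions import Fraction


def binary_to_decimal(text: str) -> Fraction:
    """Positional conversion of an unsigned binary string such as '100101.101'."""
    integral, _, fractional = text.partition(".")
    value = Fraction(0)
    for i, bit in enumerate(reversed(integral)):
        value += int(bit) * 2 ** i               # weights 2^0, 2^1, ...
    for i, bit in enumerate(fractional, start=1):
        value += int(bit) * Fraction(1, 2 ** i)  # weights 2^-1, 2^-2, ...
    return value


def decimal_to_binary(text: str, max_fraction_bits: int = 16) -> str:
    """Successive division for the integral part, multiplication for the fraction."""
    number = Fraction(text)
    integral = int(number)
    fraction = number - integral
    int_bits = ""
    while integral > 0:
        integral, remainder = divmod(integral, 2)
        int_bits = str(remainder) + int_bits     # remainders gathered bottom up
    frac_bits = ""
    while fraction != 0 and len(frac_bits) < max_fraction_bits:
        fraction *= 2
        bit = int(fraction)                      # integral part of the product
        frac_bits += str(bit)                    # bits gathered top down
        fraction -= bit
    result = int_bits or "0"
    return result + ("." + frac_bits if frac_bits else "")


print(float(binary_to_decimal("100101.101")))    # 37.625
print(decimal_to_binary("157.1875"))             # 10011101.0011
```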

2.2. Boolean Algebra

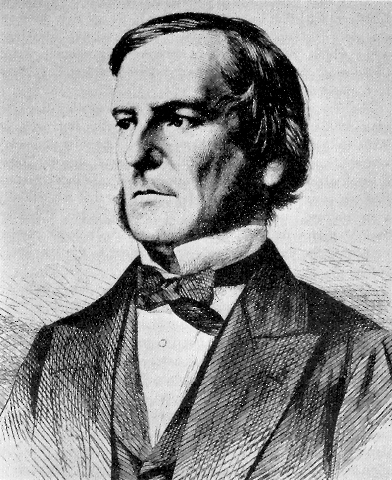

George Boole (1815-1864)

George Boole is the pioneer of the algebra we call Boolean algebra today. He introduced algebraic equations to express and manipulate logical propositions rigorously. The modern form of Boolean algebra serves as the primary mathematical tool for the design and analysis of digital circuits.

2.2.1. Axioms of Boolean Algebra

We bootstrap Boolean algebra by means of six axioms. Axioms have the form of equalities that we consider satisfied by definition. We postulate a digital domain of binary values \(\mathcal{B} = \{0, 1\},\) and three operations, AND (\(\cdot\)), OR (\(+\)), and NOT (overline \(\overline{\phantom{a}}\)). A Boolean variable represents a binary value, 0 or 1. Given Boolean variable \(x \in \mathcal{B},\) Table 2.1 lists the six axioms of Boolean algebra.

| | Axiom | Dual Axiom |
|---|---|---|
| Negation | \(\overline{0} = 1\) | \(\overline{1} = 0\) |
| Annihilation | \(x \cdot 0 = 0\) | \(x + 1 = 1\) |
| Identity | \(x \cdot 1 = x\) | \(x + 0 = x\) |

The negation axiom and its dual define the NOT operation. Negation excludes a third possibility: the negation of 0 is 1 and the negation of 1 is 0. The identity and annihilation axioms define the conjunction or AND operation. When it is clear from the context, we write the conjunction of Boolean variables \(x\) and \(y\) without the dot operator, such that \(x\,y = x \cdot y.\) Since Boolean variable \(x\) may assume value 0 or 1, each of the annihilation and identity axioms covers two cases that result in the following truth table, cf. AND Gate:

| \(x\) | \(y\) | \(x \cdot y\) | Justification |
|---|---|---|---|
| 0 | 0 | 0 | by annihilation |
| 0 | 1 | 0 | by identity |
| 1 | 0 | 0 | by annihilation |
| 1 | 1 | 1 | by identity |

Analogously, the dual identity and annihilation axioms define the disjunction or OR operation, cf. OR Gate:

| \(x\) | \(y\) | \(x + y\) | Justification |
|---|---|---|---|
| 0 | 0 | 0 | by identity |
| 0 | 1 | 1 | by annihilation |
| 1 | 0 | 1 | by identity |
| 1 | 1 | 1 | by annihilation |

The axioms and their duals are related through the principle of duality: If a Boolean equation is satisfied, then exchanging

- AND and OR operators, and

- 0’s and 1’s

yields a Boolean equation that is also satisfied. NOT operators remain unaffected by the principle of duality. To emphasize the distinction between an axiom and its dual, we also refer to the axiom as primal axiom. For example, applying the principle of duality to a dual axiom yields the primal axiom, which is the dual of the dual axiom.

In Boolean expressions with both AND and OR operators, AND has precedence over OR by convention. For example, \(x + y \cdot z\) means \(x + (y \cdot z)\) rather than \((x + y) \cdot z.\) If the association of operands and operators within an expression is unclear, we use explicit parenthesizations.
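As an illustration, the axioms and the precedence convention can be checked in Python by modeling AND with `&`, OR with `|`, and NOT with a hypothetical helper `NOT`. This mapping is an assumption made for the sketch. Python's `&` binds tighter than `|`, which happens to match the convention that AND has precedence over OR.

```python
B = (0, 1)


def NOT(x: int) -> int:
    """Complement of a binary value."""
    return 1 - x


# Negation axiom and its dual.
assert NOT(0) == 1 and NOT(1) == 0

for x in B:
    assert (x & 0) == 0 and (x | 1) == 1   # annihilation and its dual
    assert (x & 1) == x and (x | 0) == x   # identity and its dual

# x + y . z is read as x + (y . z), not as (x + y) . z.
precedence_ok = all(
    (x | y & z) == (x | (y & z)) for x in B for y in B for z in B
)
differs = any(
    (x | y & z) != ((x | y) & z) for x in B for y in B for z in B
)
print(precedence_ok, differs)  # True True
```

The second check shows that the parenthesization matters: for \(x = 1\) and \(y = z = 0,\) expression \(x + (y \cdot z)\) is 1 whereas \((x + y) \cdot z\) is 0.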

2.2.2. Switch Logic

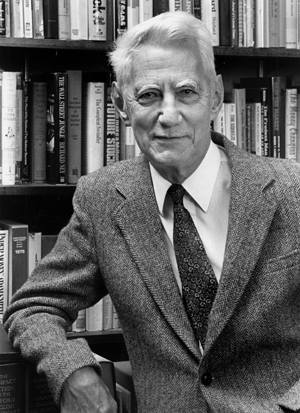

Claude Shannon (1916-2001)

Claude Shannon discovered that Boolean algebra can be used to describe the behavior of electrical switching circuits. This insight earned him a master’s degree at MIT in 1937, and has been acclaimed as the most important master’s thesis of the \(20^{th}\) century.

Figure 2.1: Switch schematic (top) and compact form (bottom). The switch is closed if \(A = 1\) and open if \(A = 0.\)

In the following, we draw a switch compactly as two wire contacts around the signal name that controls the switch position, as shown in Figure 2.1. The switch connects or disconnects terminal nodes \(u\) and \(v.\) We assume that the switch is closed if the signal is 1 and open if the signal is 0. Thus, we may use Boolean variables, like \(A,\) to denote the control signal for the switch position. We introduce a switch function as an indicator of the switch position between nodes \(u\) and \(v\):

\[\sigma_{u,v} = \begin{cases} 1\,, & \text{if nodes}\ u\ \text{and}\ v\ \text{are connected}\,, \\ 0\,, & \text{if nodes}\ u\ \text{and}\ v\ \text{are disconnected}\,. \end{cases}\]

Since the switch position is a function of the control signal, we parameterize the switch function with the control signal. For the switch in Figure 2.1, the switch function is trivial:

\[\sigma_{u,v}(A) = A\,.\]

If control signal \(A = 1,\) the switch is closed, and nodes \(u\) and \(v\) are connected. Otherwise, if \(A = 0,\) the switch is open, and nodes \(u\) and \(v\) are disconnected. This switch function models the behavior of an nMOS transistor with gate signal \(A.\)

The complemented switch in Figure 2.2 models the behavior of a pMOS transistor with gate signal \(A.\) If control signal \(A = 0,\) the complemented control signal is \(\overline{A}=1.\) The switch is closed and nodes \(u\) and \(v\) are connected. Otherwise, if control signal \(A = 1,\) the complemented control signal is \(\overline{A}=0,\) the switch is open, and nodes \(u\) and \(v\) are disconnected. The switch function of the complemented switch is

\[\sigma_{u,v}(A) = \overline{A}\,.\]

The Boolean AND and OR operations enable us to analyze switch networks by deriving an equivalent switch, analogous to the equivalent resistor (see Section Resistor Networks) and the equivalent capacitance (see Section Capacitor Networks) of an electrical network. The basic compositions of two switches are the series and parallel composition. If we are given two switches controlled by Boolean variables \(A\) and \(B\) and compose the switches in series, then the terminal nodes \(u\) and \(v\) are connected if and only if both switches are closed, see Figure 2.3. If at least one of the switches is open, then the terminals are disconnected. Therefore, two switches in series can be represented by an equivalent switch whose control signal is the conjunction \(A\, B.\)

Figure 2.3: Series composition of switches (left) and equivalent switch with control signal \(A\,B\) (right).

We can derive the switch function of the series composition using a Boolean AND of the switch functions. Figure 2.3 on the left shows two uncomplemented switches with switch functions \(\sigma_{u,w}(A) = A\) and \(\sigma_{w,v}(B) = B.\) The conjunction yields the switch function of the series composition:

\[\sigma_{u,v}(A,B) = \sigma_{u,w}(A) \cdot \sigma_{w,v}(B) = A \cdot B\,.\]

The switch function of the series composition expresses the connectivity of nodes \(u\) and \(v\) without reference to node \(w.\) In fact, inner node \(w\) is irrelevant for the abstract switch behavior between nodes \(u\) and \(v.\) This type of abstraction is key to modularization and hierarchical circuit design with subcircuits hidden in black boxes.

If we compose two switches controlled by Boolean variables \(A\) and \(B\) in parallel, then the terminals are disconnected if and only if both switches are open. If at least one switch is closed, then the terminals are connected. The equivalent switch is controlled by the disjunction \(A+B\) shown in Figure 2.4.

Figure 2.4: Parallel composition of switches (left) and equivalent switch with control signal \(A + B\) (right).

The switch function of the parallel composition of two uncomplemented switches with switch functions \(\sigma_{u,v}(A) = A\) and \(\sigma_{u,v}(B) = B\) is their disjunction:

\[\sigma_{u,v}(A,B) = \sigma_{u,v}(A) + \sigma_{u,v}(B) = A + B\,.\]

The concept of an equivalent switch facilitates transforming a series-parallel switch network into a single switch controlled by a Boolean expression that represents the original switch network. Conversely, we may transform a switch with a Boolean expression for the control logic into a series-parallel switch network with Boolean variables to control the individual switches.

Consider the series-parallel switch network in Figure 2.5 with three parallel branches of two series switches each, controlled by Boolean variables \(A,\) \(B,\) and \(C.\) We analyze the switch network by transforming it into a single, equivalent switch, deriving the equivalent Boolean expression for the switch control in the process.

Figure 2.6 steps through the transformation process. First, we realize in Figure 2.5 that each of the three parallel branches consists of a series composition of two switches. Hence, we replace each of the series compositions with the corresponding equivalent switch, using an AND operation to form the control expressions \(A\,B,\) \(A\,C,\) and \(B\,C\) for the three branches. Figure 2.6 shows the resulting switch network on the left. Next, we consider the parallel composition of switches \(A\,B\) and \(A\,C.\) The parallel composition has an equivalent switch with an OR operation of the control expressions of the individual switches. The resulting switch network, shown in the middle of Figure 2.6, reduces the two parallel switches into a single switch with control logic \(A\,B + A\,C.\) The equivalent switch for the remaining parallel composition is shown on the right in Figure 2.6. The switch is controlled by the disjunction of \(A\,B + A\,C\) and \(B\,C,\) i.e. Boolean expression \((A\,B + A\,C) + B\,C.\)

Note that we could have combined the parallel switches in the rightmost branches first. Then, we would have obtained Boolean expression \(A\,B + (A\,C + B\,C)\) with a different parenthesization. In fact, the order in which we transform the parallel compositions is immaterial, so that we can drop the parentheses altogether: \((A\,B + A\,C) + B\,C = A\,B + (A\,C + B\,C) = A\,B + A\,C + B\,C.\) Interactive Figure 2.7 enables you to explore the equivalent networks by selecting the values of Boolean variables \(A,\) \(B,\) and \(C.\)

Figure 2.7: Interactive series-parallel switch network (left), equivalent switch (middle), and corresponding truth table (right).
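In place of the interactive exploration, the equivalent-switch transformation of the three-branch network can be sketched in Python. The helpers `series` and `parallel` are hypothetical names that model the two compositions with AND and OR, and `network` encodes the three parallel branches of two series switches each.

```python
from itertools import product


def series(*switches: int) -> int:
    """Series composition: connected only if all switches are closed (AND)."""
    result = 1
    for s in switches:
        result &= s
    return result


def parallel(*switches: int) -> int:
    """Parallel composition: connected if at least one switch is closed (OR)."""
    result = 0
    for s in switches:
        result |= s
    return result


def network(a: int, b: int, c: int) -> int:
    """Three parallel branches with two series switches each."""
    return parallel(series(a, b), series(a, c), series(b, c))


table = {(a, b, c): network(a, b, c) for a, b, c in product((0, 1), repeat=3)}
for inputs, sigma in table.items():
    print(*inputs, sigma)
```

Each printed row lists \(A,\) \(B,\) \(C,\) and the value of the switch function. The terminals are connected exactly when at least two of the three control signals are 1.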

Switches can have different polarities. The uncomplemented switch of Figure 2.1 is closed if control signal \(A=1,\) whereas the complemented switch of Figure 2.2 is closed if control signal \(A=0.\) In a network where all switches under the control of one signal have the same polarity, the switch function is monotone in this signal. For example, if signal \(A\) controls uncomplemented switches only, then the switch function is monotonically increasing in \(A.\) This means that the network cannot connect previously disconnected terminals if \(A\) switches off, i.e. from 1 to 0. To implement any switch function we might be interested in, we generally need switches of both polarities.

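Figure 2.8 shows a series-parallel switch network with complemented and uncomplemented switches. We can analyze the network analogously to Example 2.6, by transforming it into an equivalent switch. The resulting control signal obeys the Boolean expression

\[\sigma(A,B,C) = A \cdot (B \cdot C + \overline{B} \cdot \overline{C}) + \overline{A} \cdot (B \cdot \overline{C} + \overline{B} \cdot C)\,.\]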

An alternative method for analyzing a switch network is the systematic enumeration of all combinations of input values of the control signals. For each input combination, we derive the value of the switch function by inspecting whether the terminals are connected or not. In case of Figure 2.8, switch function \(\sigma\) is a function of Boolean variables \(A,\) \(B,\) and \(C.\) The corresponding truth table enumerates all combinations of input values and the corresponding values of switch function \(\sigma(A,B,C)\):

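| \(A\) | \(B\) | \(C\) | \(\sigma\) |
|---|---|---|---|
| 0 | 0 | 0 | 0 |
| 0 | 0 | 1 | 1 |
| 0 | 1 | 0 | 1 |
| 0 | 1 | 1 | 0 |
| 1 | 0 | 0 | 1 |
| 1 | 0 | 1 | 0 |
| 1 | 1 | 0 | 0 |
| 1 | 1 | 1 | 1 |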

Each row of the truth table defines one value of switch function \(\sigma(A,B,C)\) for the corresponding input values, that we deduce by inspecting the switch positions of the network. For example, if \(A=B=C=0,\) then the uncomplemented \(A\)-switch in the left half of the network is open, so that the left half cannot connect the terminals, independent of the position of the \(B\)-switches and \(C\)-switches. In contrast, the complemented \(A\)-switch in the right half is closed when \(A=0,\) potentially connecting the terminals. However, neither of the lower parallel paths through the series of \(B\)-switches and \(C\)-switches closes if both \(B=0\) and \(C=0.\) Therefore, the terminals are disconnected for input combination \(A=B=C=0,\) and switch function \(\sigma(0,0,0)=0\) in the top row of the truth table. On the other hand, for input combination \(A=B=C=1,\) the three switches in the leftmost path of the network are closed and connect the terminals. Thus, switch function \(\sigma(1,1,1)=1\) in the bottom row of the truth table. The remaining rows can be derived analogously. The truth table specification and the Boolean expression of the switch function of a network are different yet equivalent forms. Both forms specify the 3-input XOR function. We show below how to transform one form into the other.
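The enumeration can be mechanized. The sketch below assumes, based on the description of the two halves of the network, that the left half is an uncomplemented \(A\)-switch in series with two parallel \(B\)-\(C\) paths, and that the right half is a complemented \(A\)-switch in series with two further parallel \(B\)-\(C\) paths. The exact arrangement in Figure 2.8 may differ, so the function `sigma` should be read as an assumption rather than a transcription of the figure.

```python
from itertools import product


def sigma(a: int, b: int, c: int) -> int:
    """Assumed switch function of the series-parallel network in Figure 2.8."""
    na, nb, nc = 1 - a, 1 - b, 1 - c           # complemented control signals
    left = a & ((b & c) | (nb & nc))           # half with the uncomplemented A-switch
    right = na & ((b & nc) | (nb & c))         # half with the complemented A-switch
    return left | right


rows = [(a, b, c, sigma(a, b, c)) for a, b, c in product((0, 1), repeat=3)]
for row in rows:
    print(*row)
```

Under this assumption the enumeration agrees with the 3-input XOR, which Python expresses as `a ^ b ^ c`.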

The truth table method applies to arbitrary switch networks, including non-series-parallel networks which we cannot transform into an equivalent switch by reducing series and parallel compositions. As an example, consider the network in Figure 2.9. This network is well known among electricians, because it is suited to control the lights in a stairway across three levels of a house. Each level has one light switch, for example the basement with control signal \(A,\) first floor with control signal \(B,\) and second floor with control signal \(C.\) Pushing any of the light switches will turn the lights either on or off. Each light switch contains two or four switches. You can add light switches to the network by including additional 4-way switches like the one controlled by input signal \(B\) with four terminals \(w,\) \(x,\) \(y,\) and \(z.\)

We analyze Figure 2.9 by constructing the truth table. Rather than inspecting the network for each of the eight input combinations of control signals \(A,\) \(B,\) and \(C,\) we save inspection effort by identifying only those input combinations that connect the terminals \(u\) and \(v\) and, therefore, have value \(\sigma(A,B,C)=1.\) The remaining rows of the table must have switch function value 0. More succinctly, we determine all simple paths that connect the terminals if all switches on the path are closed. Simple paths are paths that visit each node at most once. The network has these simple paths between terminal nodes \(u\) and \(v,\) and associated values for control signals \(A,\) \(B,\) and \(C\) to close the path and connect the terminals:

| simple path | \(A\) | \(B\) | \(C\) |
|---|---|---|---|
| \(u \rightarrow w \rightarrow x \rightarrow v\) | 1 | 0 | 0 |
| \(u \rightarrow w \rightarrow z \rightarrow v\) | 1 | 1 | 1 |
| \(u \rightarrow y \rightarrow z \rightarrow v\) | 0 | 0 | 1 |
| \(u \rightarrow y \rightarrow x \rightarrow v\) | 0 | 1 | 0 |

The switch network has more simple paths than these four, including path \(u \rightarrow w \rightarrow z \rightarrow y \rightarrow x \rightarrow v.\) However, there exists no combination of control values to close the path, because it contains both complemented and uncomplemented switches controlled by input \(B.\) For example, if we choose \(B=1,\) then \(\sigma_{w,z}(B) = 1,\) but \(\sigma_{z,y}(B)=0,\) and the path does not connect terminals \(u\) and \(v.\) Otherwise, if \(B=0,\) then \(\sigma_{w,z}(B)=0\) and \(\sigma_{z,y}(B)=1,\) and the path does not connect the terminals either.

The network has even more paths between terminals \(u\) and \(v,\) such as path \(u \rightarrow w \rightarrow x \rightarrow y \rightarrow z \rightarrow w \rightarrow x \rightarrow v.\) However, this path is not simple, because it visits nodes \(w\) and \(x\) twice. Furthermore, the path cannot connect the terminals, because it contains both complemented and uncomplemented switches controlled by input \(B.\) To analyze a network, it suffices to find all simple paths between the nodes of interest. More complex paths cannot provide more connectivity information than simple paths capture already.

We translate our path analysis into a truth table by assigning a 1 to all input combinations that represent closed simple paths and 0 to all remaining input combinations. The resulting truth table is the same as for the switch network in Figure 2.8. We conclude that the non-series-parallel network in Figure 2.9 and the series-parallel network in Figure 2.8 implement the same switch function, a 3-input XOR function. The non-series-parallel network uses only eight switches compared to ten switches in the series-parallel network. This is no accident. Non-series-parallel implementations of symmetric switch functions are generally more compact than their equivalent series-parallel counterparts.
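The path analysis can be sketched as a small graph search. The edge list `SWITCHES` below is an assumption reconstructed from the table of simple paths and the discussion of the \(B\)-switches. It is not a transcription of Figure 2.9, and the function names are hypothetical.

```python
from itertools import product

# Each switch: (node, node, control variable, value that closes the switch).
# Assumed edge list, reconstructed from the path table in the text.
SWITCHES = [
    ("u", "w", "A", 1), ("u", "y", "A", 0),
    ("w", "x", "B", 0), ("w", "z", "B", 1),
    ("y", "z", "B", 0), ("y", "x", "B", 1),
    ("x", "v", "C", 0), ("z", "v", "C", 1),
]


def simple_paths(node, target, visited=()):
    """Yield every simple path as a list of (variable, closing value) pairs."""
    if node == target:
        yield []
        return
    for p, q, var, val in SWITCHES:
        if node in (p, q):
            nxt = q if node == p else p
            if nxt not in visited:               # never revisit a node
                for rest in simple_paths(nxt, target, visited + (node,)):
                    yield [(var, val)] + rest


def sigma(a, b, c):
    """1 if some simple path from u to v has all of its switches closed."""
    inputs = {"A": a, "B": b, "C": c}
    return int(any(all(inputs[var] == val for var, val in path)
                   for path in simple_paths("u", "v")))


for a, b, c in product((0, 1), repeat=3):
    print(a, b, c, sigma(a, b, c))
```

With this edge list the search finds eight simple paths, of which only the four listed in the table can be closed. The other four each require \(B\) to be 0 and 1 at the same time.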

2.2.3. Theorems of Boolean Algebra

The theorems of Boolean algebra state properties of Boolean expressions in form of equalities. The proof method of choice for these theorems is perfect induction: enumerate all possible combinations of variable values and for each combination verify that the equality holds under the axioms of Boolean algebra.

We introduce the theorems of Boolean algebra starting with those theorems that involve a single Boolean variable \(A \in \mathcal{B}\):

| | Theorem | Dual Theorem |
|---|---|---|
| Idempotence | \(A \cdot A = A\) | \(A + A = A\) |
| Complementation | \(A \cdot \overline{A} = 0\) | \(A + \overline{A} = 1\) |
| Involution | \(\overline{\overline{A}} = A\) | \(\overline{\overline{A}} = A\) |

If you remember one theorem you can apply the principle of duality to derive its dual theorem. Since NOT operators are unaffected by the principle of duality, the involution theorem and its dual are equal. We also denote a theorem as primal theorem when appropriate to emphasize that it is the dual of its dual theorem.

To illustrate the proof method of perfect induction, let us prove the complementation theorem. The complementation theorem states that the conjunction of \(A\) and its complement equals 0. Since Boolean variable \(A\) can assume two values 0 or 1, we construct a truth table with one row per value of \(A,\) and two columns, one for the left-hand side, lhs \(= A \cdot \overline{A},\) and the other for the right-hand side, rhs \(= 0,\) of equation \(A \cdot \overline{A} = 0\):

| \(A\) | lhs | rhs |
|---|---|---|
| 0 | 0 | 0 |
| 1 | 0 | 0 |

Since the rhs is equal to constant 0 independent of the value of \(A,\) we enter constant 0 in both rows of column rhs. The lhs of the theorem is a Boolean expression. A rigorous proof invokes the axioms of Boolean algebra to deduce the lhs values in each row. As the first case consider \(A = 0.\) The negation axiom yields \(\overline{A} = 1,\) so that \(A \cdot \overline{A} = 0 \cdot 1.\) Next, apply the identity axiom with \(x=0\) to obtain \(0 \cdot 1 = 0.\) Thus, we enter value 0 in the top row of the lhs column. Analogously, for \(A=1\) the negation axiom yields \(\overline{A}=0.\) Thus, the lhs is \(A \cdot \overline{A} = 1 \cdot 0.\) This conjunction has the form of the annihilation axiom. For \(x = 1,\) the annihilation axiom postulates that \(1 \cdot 0 = 0.\) Therefore, we enter value 0 in the bottom row of the lhs column. To complete the perfect induction we compare the columns of the lhs and the rhs row by row. Since the values are equal in all rows, we conclude that the equality holds, and the complementation theorem is proven.
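Perfect induction lends itself to automation. The sketch below uses a hypothetical helper `perfect_induction` that compares both sides of an equation for every combination of variable values. It evaluates the expressions with Python's `&` and `|` operators rather than deducing each row from the axioms, so it checks the equalities but does not replace the rigorous argument above.

```python
from itertools import product


def perfect_induction(lhs, rhs, num_vars: int) -> bool:
    """Compare lhs and rhs for every combination of variable values."""
    return all(lhs(*values) == rhs(*values)
               for values in product((0, 1), repeat=num_vars))


def NOT(x: int) -> int:
    """Complement of a binary value."""
    return 1 - x


theorems = {
    "idempotence": (lambda a: a & a, lambda a: a),
    "idempotence (dual)": (lambda a: a | a, lambda a: a),
    "complementation": (lambda a: a & NOT(a), lambda a: 0),
    "complementation (dual)": (lambda a: a | NOT(a), lambda a: 1),
    "involution": (lambda a: NOT(NOT(a)), lambda a: a),
}
for name, (lhs, rhs) in theorems.items():
    print(name, perfect_induction(lhs, rhs, 1))
```

The helper takes the number of variables as a parameter, so the same function applies to equations with two or three variables, at the cost of four or eight evaluations.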

When designing digital circuits, the theorems of Boolean algebra can be used to simplify a given circuit by removing gates. For example, the idempotence theorem, \(A \cdot A = A,\) and its dual, \(A + A = A,\) enable us to remove an AND gate or an OR gate such that input \(A\) remains as driver of the output wire:

Similarly, the complementation theorem, \(A \cdot \overline{A} = 0,\) and its dual, \(A + \overline{A} = 1,\) permit removing an AND gate or OR gate, and driving the output with 0 or 1 by tying the wire to GND or \(V_{DD}\):

The involution theorem, \(\overline{\overline{A}} = A,\) tells us that two back-to-back inverters can be removed without affecting the logical function. Simply drive the output wire with the input signal:

The design of a fast digital circuit may require adding rather than removing gates, with the goal to optimize the number of stages of a path. In this case, we can use the theorems of Boolean algebra in the opposite direction. For example, if we have a wire driven by input signal \(A,\) we can add two stages to the path by inserting two inverters. The involution theorem guarantees that the additional inverters do not change the logical function of the circuit.

The theorems of Boolean algebra with two and three Boolean variables \(A, B, C \in \mathcal{B}\) are:

| | Theorem | Dual Theorem |
|---|---|---|
| Commutativity | \(A \cdot B = B \cdot A\) | \(A + B = B + A\) |
| Associativity | \((A \cdot B) \cdot C = A \cdot (B \cdot C)\) | \((A + B) + C = A + (B + C)\) |
| Distributivity | \(A \cdot (B + C) = (A \cdot B) + (A\cdot C)\) | \(A + (B \cdot C) = (A + B) \cdot (A + C)\) |
| Combining | \((A \cdot B) + (A \cdot \overline{B}) = A\) | \((A + B) \cdot (A + \overline{B}) = A\) |
| Covering | \(A \cdot (A + B) = A\) | \(A + (A \cdot B) = A\) |
| Absorption | \(A \cdot (\overline{A} + B) = A \cdot B\) | \(A + (\overline{A} \cdot B) = A + B\) |
| De Morgan | \(\overline{A \cdot B} = \overline{A} + \overline{B}\) | \(\overline{A + B} = \overline{A} \cdot \overline{B}\) |

It is straightforward to prove all of these theorems by means of perfect induction. As a representative example, we prove the absorption theorem \(A \cdot (\overline{A} + B) = A \cdot B.\) This theorem reduces the size of the left-hand side by absorbing the complement of \(A.\) Since the theorem involves two variables, \(A\) and \(B,\) the truth table for the perfect induction comprises four rows, one for each of the combinations \(A\,B \in \{ 00, 01, 10, 11\}.\) The right-hand side of the theorem, rhs \(= A \cdot B,\) is simpler than the left-hand side, lhs \(= A \cdot (\overline{A} + B).\) Therefore, we prove the lhs in three steps, and include separate columns for subexpressions \(\overline{A}\) and \(\overline{A} + B.\)

| \(A\) | \(B\) | \(\overline{A}\) | \(\overline{A} + B\) | lhs | rhs |
|---|---|---|---|---|---|
| 0 | 0 | 1 | 1 | 0 | 0 |
| 0 | 1 | 1 | 1 | 0 | 0 |
| 1 | 0 | 0 | 0 | 0 | 0 |
| 1 | 1 | 0 | 1 | 1 | 1 |

Column \(\overline{A}\) negates the values in column \(A\) by the negation axiom. The column for the rhs is derived from the AND operation \(A \cdot B\) that we proved with the axioms of Boolean algebra for \(x=A\) and \(y=B\) already. Subexpression \(\overline{A}+B\) is the disjunction of the values in the column for \(\overline{A}\) and the column for \(B.\) Thus, we rely on the proof of the OR operation with the axioms of Boolean algebra to deduce that \(\overline{A} + B\) is 0 only if \(\overline{A} = 0\) and \(B=0,\) which is true in the third row of the table. Analogously, the lhs is a conjunction of the values in the columns for \(A\) and \(\overline{A} + B.\) We deduce the values in the lhs column by noting that the conjunction is 1 only if \(A=1\) and \(\overline{A} + B = 1,\) which is true in the bottom row of the table. We conclude the perfect induction by noting that the columns for the lhs and the rhs are identical, which proves the absorption theorem.

Perfect induction is a brute-force proof method that works well for theorems with one or two variables. With three variables, the truth table has eight rows already, increasing the chances of error during the manual construction process. The theorems of Boolean algebra empower us to prove the equality of Boolean expressions by algebraic manipulation. This method starts with one side of the equation, and deduces the other side by repeated application of the theorems of Boolean algebra. As an example, we offer an alternative proof of the absorption theorem by means of Boolean algebra:

\[
\begin{aligned}
A \cdot (\overline{A} + B) &= (A \cdot \overline{A}) + (A \cdot B) && \text{distributivity} \\
&= 0 + (A \cdot B) && \text{complementation} \\
&= (A \cdot B) + 0 && \text{commutativity} \\
&= A \cdot B && \text{identity}
\end{aligned}
\]

Using three of the theorems of Boolean algebra and the identity axiom, we have transformed the lhs of the absorption theorem into the rhs. The opposite direction constitutes a valid proof too, because equalities hold independent of the direction of the derivation. With a little practice and a good command of the theorems of Boolean algebra, we can often construct proofs by Boolean algebra that are shorter and clearer than the equivalent proofs by perfect induction.

Prove these theorems by perfect induction:

- combining theorem: \(A \cdot B + A \cdot \overline{B} = A,\)

- covering theorem: \(A \cdot (A + B) = A.\)

Both theorems involve two Boolean variables \(A\) and \(B.\) Therefore, we construct truth tables with four rows, one row per combination of input values \(A\,B \in \{ 00, 01, 10, 11\}.\)

We begin with the perfect induction of combining theorem \(A \cdot B + A \cdot \overline{B} = A.\) The right-hand side rhs \(= A\) is trivial: it is a copy of the column of variable \(A.\) The left-hand side is a disjunction of conjunctions. We introduce three columns for its subexpressions: one column for \(\overline{B},\) and one column for conjunctions \(A \cdot B\) and \(A \cdot \overline{B}\) respectively.

| \(A\) | \(B\) | \(\overline{B}\) | \(A \cdot B\) | \(A \cdot \overline{B}\) | lhs | rhs |
|---|---|---|---|---|---|---|
| 0 | 0 | 1 | 0 | 0 | 0 | 0 |
| 0 | 1 | 0 | 0 | 0 | 0 | 0 |
| 1 | 0 | 1 | 0 | 1 | 1 | 1 |
| 1 | 1 | 0 | 1 | 0 | 1 | 1 |

Column \(\overline{B}\) is a direct consequence of the negation axioms. In each row where \(B = 0,\) insert the complement \(\overline{B} = 1,\) and where \(B = 1,\) insert \(\overline{B} = 0.\) Column \(A \cdot B\) equals 1 only in the row where both \(A\) and \(B\) are 1, which is the bottom row of the truth table. Analogously, column \(A \cdot \overline{B}\) equals 1 only in the row where \(A\) and \(\overline{B}\) are 1, i.e. in the third row. The left-hand side is the disjunction of the columns for \(A\cdot B\) and \(A\cdot \overline{B}.\) The disjunction is 0 only in those rows where both subexpressions \(A\cdot B = 0\) and \(A\cdot \overline{B} = 0,\) which are the two top rows in the table. Comparing the columns of lhs and rhs, we find that the columns are equal, which proves the combining theorem.

Next, we prove the covering theorem by perfect induction. Again, the rhs is simply a copy of the column of variable \(A.\) The lhs \(A \cdot (A + B)\) is a conjunction of \(A\) and disjunction \(A+B.\) Thus, we introduce a separate column for subexpression \(A+B.\)

| \(A\) | \(B\) | \(A + B\) | lhs | rhs |
|---|---|---|---|---|
| 0 | 0 | 0 | 0 | 0 |
| 0 | 1 | 1 | 0 | 0 |
| 1 | 0 | 1 | 1 | 1 |
| 1 | 1 | 1 | 1 | 1 |

The disjunction \(A + B\) is 0 only where both variables \(A\) and \(B\) are 0, which is in the first row only. The lhs is 1 only where both \(A\) and \(A+B\) are 1, which is the case in the two bottom rows. We conclude that the covering theorem is proven, because the columns for lhs and rhs are equal.

2.2.4. Normal Forms¶

Equality is an important relation between two switch networks. Given two switch networks, their analysis should reveal unambiguously whether the networks are functionally equal, that is, whether they implement the same switch function. This knowledge enables the circuit designer to choose the smaller, faster, or less expensive design. For example, the switch networks in Figure 2.8 and Figure 2.9 have the same switch function, because our analysis revealed that both networks have the same truth table. Network analysis by deriving a truth table is essentially a proof by perfect induction. In this section we discuss an alternative style of analysis based on Boolean algebra. In short, every Boolean expression has a unique canonical or normal form. If we are given two Boolean expressions, we can prove their equality by transforming each into normal form. If the normal forms are identical, then the Boolean expressions are equal.

The combining theorems of Boolean algebra are the key to deriving a normal form of a Boolean expression. The combining theorem, \((A \cdot B) + (A \cdot \overline{B}) = A,\) and its dual, \((A + B) \cdot (A + \overline{B}) = A,\) eliminate one variable, \(B,\) from an expression in two variables, \(A\) and \(B.\) Here is the proof of the combining theorem with lhs \(= (A \cdot B) + (A \cdot \overline{B})\) and rhs \(= A\) by perfect induction:

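| \(A\) | \(B\) | \(\overline{B}\) | \(A \cdot B\) | \(A \cdot \overline{B}\) | lhs | rhs |
|---|---|---|---|---|---|---|
| 0 | 0 | 1 | 0 | 0 | 0 | 0 |
| 0 | 1 | 0 | 0 | 0 | 0 | 0 |
| 1 | 0 | 1 | 0 | 1 | 1 | 1 |
| 1 | 1 | 0 | 1 | 0 | 1 | 1 |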

If we use the combining theorem to include rather than eliminate Boolean variables, we arrive at a normal form. Given a set of Boolean variables, and a Boolean expression, we apply the combining theorem repeatedly until each conjunction contains all Boolean variables in complemented or uncomplemented form. This construction process terminates for a finite number of Boolean variables. For example, if we are given a Boolean expression \(\overline{A} + B\) in two variables, \(A\) and \(B,\) we apply the combining theorem to each operand of the disjunction and remove duplicate conjunctions by virtue of the idempotence theorem:

\[
\begin{aligned}
\overline{A} + B &= (\overline{A}\,B + \overline{A}\,\overline{B}) + (A\,B + \overline{A}\,B) && \text{combining} \\
&= \overline{A}\,B + \overline{A}\,\overline{B} + A\,B && \text{idempotence}
\end{aligned}
\]

Boolean expression \(\overline{A}\,B + \overline{A}\,\overline{B} + A\,B\) is a so-called sum-of-products normal form, abbreviated SOP normal form, because it is a disjunction (sum) of conjunctions (products), and each conjunction contains all variables either in complemented or uncomplemented form.

The fact that an SOP normal form is unique follows immediately from the associated truth table representation. The truth table below proves the equality \(\overline{A}\,B + \overline{A}\,\overline{B} + A\,B = \overline{A} + B\) by perfect induction.

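| \(A\) | \(B\) | \(\overline{A}\,B\) | \(\overline{A}\,\overline{B}\) | \(A\,B\) | \(\overline{A}\,B + \overline{A}\,\overline{B}+ A\,B\) | \(\overline{A} + B\) |
|---|---|---|---|---|---|---|
| 0 | 0 | 0 | 1 | 0 | 1 | 1 |
| 0 | 1 | 1 | 0 | 0 | 1 | 1 |
| 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| 1 | 1 | 0 | 0 | 1 | 1 | 1 |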

The equality proof itself is not of primary interest here, although it confirms our earlier derivation by Boolean algebra. Instead, we focus our attention on the three columns for the conjunctions \(\overline{A}\,B,\) \(\overline{A}\,\overline{B},\) and \(A\,B\) of the SOP normal form. Observe that each of these three columns contains exactly one 1. All other entries are 0. This is no accident. Each combination of input values in a row of the truth table corresponds to exactly one unique conjunction of all Boolean variables in complemented or uncomplemented form. If the variable value in a row is 1, the variable appears in uncomplemented form in the conjunction, and if the variable value is 0 the variable appears in complemented form. These conjunctions are called minterms. For two Boolean variables there are four minterms, each with value 1 only for the variable values in the associated row:

| decimal | \(A\) | \(B\) | minterm |
|---|---|---|---|
| 0 | 0 | 0 | \(\overline{A}\,\overline{B}\) |
| 1 | 0 | 1 | \(\overline{A}\,B\) |
| 2 | 1 | 0 | \(A\,\overline{B}\) |
| 3 | 1 | 1 | \(A\,B\) |

If we interpret the juxtaposition of the Boolean variable values as an unsigned binary number, each row is associated with a unique number. The table above includes the numbers in decimal format in the leftmost column. Thus, we may refer to a minterm by its associated decimal number. Then, the SOP normal form can be written as a disjunction (sum) of those minterms for which the function is 1. For example, we may write our SOP normal form with decimal minterm codes as:

\[
\overline{A} + B\ =\ \overline{A}\,\overline{B} + \overline{A}\,B + A\,B\ =\ \sum (0, 1, 3)\,.
\]

The sorted list of minterm codes in the sum identifies the switch function in a syntactically unique form. It lists all rows of the truth table where the function assumes value 1. Two switch functions are equal if and only if they have the same sorted list of minterms. Comparing two lists of minterms is equivalent to comparing two columns of a truth table during a perfect induction.

We conclude that a truth table and an SOP normal form are isomorphic specifications of a switch function. More generally, switch function \(f(x_0, x_1, \ldots, x_{n-1})\) in \(n\) Boolean variables \(x_0, x_1, \ldots, x_{n-1}\) can be specified by means of a truth table with \(2^n\) rows with combinations of variable values in decimal range \([0,2^n-1].\) In the column for switch function \(f \in \mathcal{B}\) we assign either Boolean value 0 or 1 to the value combination in each row. The isomorphic specification of switch function \(f\) by means of an SOP normal form is the disjunction of up to \(2^n\) minterms in range \([0,2^n-1]\) for which \(f\) assumes value 1.
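
The correspondence between truth table rows and minterm codes can be sketched in a few lines of Python. The function name `minterm_codes` is hypothetical, Boolean values are assumed to be the integers 0 and 1, and the first argument of `f` is treated as the most significant bit, as in the tables above.

```python
from itertools import product

def minterm_codes(f, num_vars):
    """Return the sorted decimal codes of the rows where f evaluates to 1."""
    codes = []
    # product() enumerates rows in ascending binary order, so the
    # enumeration index equals the decimal code of the row.
    for code, values in enumerate(product((0, 1), repeat=num_vars)):
        if f(*values):
            codes.append(code)
    return codes

# Two syntactically different expressions for the same switch function.
f1 = lambda a, b: (1 - a) | b                                 # not A + B
f2 = lambda a, b: ((1 - a) & b) | ((1 - a) & (1 - b)) | (a & b)

print(minterm_codes(f1, 2))
print(minterm_codes(f1, 2) == minterm_codes(f2, 2))
```

Comparing the two lists is the programmatic counterpart of comparing two columns of a truth table.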

We may derive the SOP normal form of a switch function \(f(x_0, x_1, \ldots, x_{n-1})\) rigorously from a truth table specification by factoring \(f\) about each of the variables with the aid of Shannon’s expansion theorem:

\[
f(x_0, x_1, \ldots, x_{n-1})\ =\ \overline{x}_0 \cdot f(0, x_1, \ldots, x_{n-1}) + x_0 \cdot f(1, x_1, \ldots, x_{n-1})\,.
\]

This equation expands \(f\) about variable \(x_0.\) The expansions about any of the other variables are analogous. The expansion theorem tells us that \(f\) can be written as a disjunction of two conjunctions. In the first conjunction, we replace all occurrences of \(x_0\) in \(f\) with constant 0, and AND the resulting expression with \(\overline{x}_0.\) Similarly, the second conjunction substitutes constant 1 for all occurrences of \(x_0\) in \(f,\) and ANDs the result with \(x_0.\) Note that variable \(x_0\) vanishes out of expressions \(f(0, x_1, \ldots, x_{n-1})\) and \(f(1, x_1, \ldots, x_{n-1}).\) We may view Shannon’s expansion as an operation that lifts variable \(x_0\) out of \(f\) into the top-level disjunction. There, \(x_0\) acts like the predicate of the ite function, or the select input of a multiplexer.

Shannon’s expansion theorem is quickly proven by perfect induction, even without constructing a truth table: The Shannon expansion about Boolean variable \(x_0\) can take one of two forms, depending on the value of \(x_0.\) For \(x_0 = 0,\) Shannon’s expansion theorem yields equation:

\[
f(0, x_1, \ldots, x_{n-1})\ =\ 1 \cdot f(0, x_1, \ldots, x_{n-1}) + 0 \cdot f(1, x_1, \ldots, x_{n-1})\ =\ f(0, x_1, \ldots, x_{n-1})\,.
\]

Otherwise, for \(x_0 = 1,\) Shannon’s expansion theorem states:

\[
f(1, x_1, \ldots, x_{n-1})\ =\ 0 \cdot f(0, x_1, \ldots, x_{n-1}) + 1 \cdot f(1, x_1, \ldots, x_{n-1})\ =\ f(1, x_1, \ldots, x_{n-1})\,.
\]

Since the equations for each of the two cases are true statements, the theorem is proven.
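
As an informal cross-check of the theorem, the expansion about the first variable can be written as a small Python wrapper and compared against the original function for every input combination. The name `shannon_expansion` is our own, and the integers 0 and 1 stand in for the Boolean values.

```python
from itertools import product

def shannon_expansion(f):
    """Return a function that evaluates f via its expansion about the first variable."""
    def expanded(x0, *rest):
        f0 = f(0, *rest)  # f with constant 0 substituted for x0
        f1 = f(1, *rest)  # f with constant 1 substituted for x0
        # Disjunction of the two conjunctions; x0 acts like a select input.
        return ((1 - x0) & f0) | (x0 & f1)
    return expanded

def f(a, b):
    """Example switch function f(A, B) = not A + B."""
    return (1 - a) | b

expanded_f = shannon_expansion(f)
agrees = all(expanded_f(*v) == f(*v) for v in product((0, 1), repeat=2))
print(agrees)
```

The check is itself a perfect induction over the variables of `f`, so it confirms the expansion only for the function supplied, whereas the case analysis above covers all switch functions.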

Now, apply the Shannon expansion to each of the variables of switch function \(f\):

\[
\begin{aligned}
f(x_0, x_1, \ldots, x_{n-1})\ =\ & \overline{x}_0\,\overline{x}_1 \cdots \overline{x}_{n-2}\,\overline{x}_{n-1} \cdot f(0, 0, \ldots, 0, 0) \\
+\ & \overline{x}_0\,\overline{x}_1 \cdots \overline{x}_{n-2}\,x_{n-1} \cdot f(0, 0, \ldots, 0, 1) \\
+\ & \cdots \\
+\ & x_0\,x_1 \cdots x_{n-2}\,x_{n-1} \cdot f(1, 1, \ldots, 1, 1)\,,
\end{aligned}
\]

which is the SOP normal form of \(f,\) given the function values for all combinations of variable values.

For example, our Boolean expression \(\overline{A} + B\) specifies a switch function in two variables \(f(A,B) = \overline{A} + B,\) that expands to

\[
f(A,B)\ =\ \overline{A}\,\overline{B} \cdot f(0,0) + \overline{A}\,B \cdot f(0,1) + A\,\overline{B} \cdot f(1,0) + A\,B \cdot f(1,1)\,.
\]

We tabulate the evaluation of \(f(A,B)\) for all combinations of variable values to stress the isomorphism with the corresponding truth table:

| decimal | \(A\) | \(B\) | \(f(A,B) = \overline{A}+B\) |
|---|---|---|---|
| 0 | 0 | 0 | \(f(0,0) = 1\) |
| 1 | 0 | 1 | \(f(0,1) = 1\) |
| 2 | 1 | 0 | \(f(1,0) = 0\) |
| 3 | 1 | 1 | \(f(1,1) = 1\) |

Substituting these function values into the Shannon expansion, we obtain the SOP normal form of \(f\):

\[
f(A,B)\ =\ \overline{A}\,\overline{B} \cdot 1 + \overline{A}\,B \cdot 1 + A\,\overline{B} \cdot 0 + A\,B \cdot 1\ =\ \overline{A}\,\overline{B} + \overline{A}\,B + A\,B\,.
\]

Shannon’s expansion theorem is famous in part because it provides a clean mathematical connection between a truth table and the corresponding SOP normal form.
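
The construction of the SOP normal form from function values can be sketched in Python as follows. The function name `sop_expression` and the textual notation are assumptions made for this illustration: a trailing apostrophe marks a complemented variable, and Boolean values are the integers 0 and 1.

```python
from itertools import product

def sop_expression(f, names):
    """Build the SOP normal form of f as text, one minterm per row where f is 1."""
    terms = []
    for values in product((0, 1), repeat=len(names)):
        if f(*values):
            # Value 1: uncomplemented variable; value 0: complemented variable.
            literals = [n if v else n + "'" for n, v in zip(names, values)]
            terms.append(" ".join(literals))
    # A function that is 0 everywhere has no minterms at all.
    return " + ".join(terms) if terms else "0"

print(sop_expression(lambda a, b: (1 - a) | b, ["A", "B"]))
# prints: A' B' + A' B + A B
```

Rows with function value 0 contribute nothing, which mirrors how the corresponding minterms drop out of the fully expanded Shannon form.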

The principle of duality points us to another normal form, the product-of-sums normal form, or POS normal form for short. We may use the dual combining theorem, \((A + B) \cdot (A + \overline{B}) = A,\) to include all variables of a switch function in each disjunction. Disjunctions that include all variables of a switch function in complemented or uncomplemented form are called maxterms. For example, assume we are given function \(f(A,B) = \overline{A} \cdot B.\) Application of the dual combining theorem and subsequent removal of duplicate disjunctions yields the corresponding POS normal form of \(f\):

\[
\begin{aligned}
\overline{A} \cdot B &= \bigl((\overline{A} + B) \cdot (\overline{A} + \overline{B})\bigr) \cdot \bigl((A + B) \cdot (\overline{A} + B)\bigr) && \text{dual combining} \\
&= (A + B) \cdot (\overline{A} + B) \cdot (\overline{A} + \overline{B}) && \text{idempotence}
\end{aligned}
\]

The general construction procedure of the POS normal form relies on the dual of Shannon’s expansion theorem:

\[
f(x_0, x_1, \ldots, x_{n-1})\ =\ \bigl(x_0 + f(0, x_1, \ldots, x_{n-1})\bigr) \cdot \bigl(\overline{x}_0 + f(1, x_1, \ldots, x_{n-1})\bigr)
\]

to expand function \(f\) about all variables:

\[
\begin{aligned}
f(x_0, x_1, \ldots, x_{n-1})\ =\ & \bigl(x_0 + x_1 + \cdots + x_{n-2} + x_{n-1} + f(0, 0, \ldots, 0, 0)\bigr) \\
\cdot\ & \bigl(x_0 + x_1 + \cdots + x_{n-2} + \overline{x}_{n-1} + f(0, 0, \ldots, 0, 1)\bigr) \\
\cdot\ & \cdots \\
\cdot\ & \bigl(\overline{x}_0 + \overline{x}_1 + \cdots + \overline{x}_{n-2} + \overline{x}_{n-1} + f(1, 1, \ldots, 1, 1)\bigr)\,.
\end{aligned}
\]

Given the function values of \(f\) for all combinations of variable values, we obtain the POS normal form. If the function value is 1, then the associated maxterm vanishes from the POS due to the identity and dual annihilation axioms. Otherwise, if the function value is 0, the associated maxterm is included in the POS by virtue of the dual identity axiom.

The maxterms and function values of switch function \(f(A,B) = \overline{A} \cdot B\) are listed in the truth table:

| decimal | \(A\) | \(B\) | maxterm | \(f(A,B) = \overline{A} \cdot B\) |
|---|---|---|---|---|
| 0 | 0 | 0 | \(A + B\) | \(f(0,0) = 0\) |
| 1 | 0 | 1 | \(A + \overline{B}\) | \(f(0,1) = 1\) |
| 2 | 1 | 0 | \(\overline{A} + B\) | \(f(1,0) = 0\) |
| 3 | 1 | 1 | \(\overline{A} + \overline{B}\) | \(f(1,1) = 0\) |

Substituting these function values in the dual Shannon expansion, we obtain the POS normal form:

\[
\begin{aligned}
f(A,B) &= (A + B + 0) \cdot (A + \overline{B} + 1) \cdot (\overline{A} + B + 0) \cdot (\overline{A} + \overline{B} + 0) \\
&= (A + B) \cdot (\overline{A} + B) \cdot (\overline{A} + \overline{B})\ =\ \prod (0, 2, 3)\,.
\end{aligned}
\]

The product of the sorted list of decimal maxterm codes is a unique normal form of the switch function.
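
The dual construction can be sketched in Python as well. The helper names `maxterm_codes`, `maxterm`, and `pos_value` are hypothetical, Boolean values are assumed to be the integers 0 and 1, and the first variable is again the most significant bit of the decimal code.

```python
from itertools import product

def maxterm_codes(f, num_vars):
    """Return the sorted decimal codes of the rows where f evaluates to 0."""
    rows = enumerate(product((0, 1), repeat=num_vars))
    return [code for code, values in rows if not f(*values)]

def maxterm(code, values):
    """Evaluate the maxterm with the given code; it is 0 only in its own row."""
    n = len(values)
    result = 0
    for i, v in enumerate(values):
        bit = (code >> (n - 1 - i)) & 1
        # Row bit 1: complemented variable; row bit 0: uncomplemented variable.
        result |= (1 - v) if bit else v
    return result

def pos_value(codes, values):
    """Evaluate the conjunction (product) of the listed maxterms."""
    result = 1
    for code in codes:
        result &= maxterm(code, values)
    return result

f = lambda a, b: (1 - a) & b   # f(A, B) = not A * B
codes = maxterm_codes(f, 2)
print(codes)
print(all(pos_value(codes, v) == f(*v) for v in product((0, 1), repeat=2)))
```

The second line of output compares the product of maxterms with `f` row by row, which is again a perfect induction.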

The SOP and POS normal forms of a switch function are logically equal, but are not duals of each other in general. For example, function \(f(A,B)=\overline{A}+B\) has SOP and POS normal forms:

\[
\begin{aligned}
\text{SOP:}\quad f(A,B) &= \overline{A}\,\overline{B} + \overline{A}\,B + A\,B\ =\ \sum (0, 1, 3)\,, \\
\text{POS:}\quad f(A,B) &= \overline{A} + B\ =\ \prod (2)\,.
\end{aligned}
\]

In fact, function \(\overline{A} + B\) is in POS normal form already, because the expression is equal to maxterm 2. Similarly, function \(f(A,B)=\overline{A}\cdot B\) has SOP and POS normal forms:

\[
\begin{aligned}
\text{SOP:}\quad f(A,B) &= \overline{A}\,B\ =\ \sum (1)\,, \\
\text{POS:}\quad f(A,B) &= (A + B) \cdot (\overline{A} + B) \cdot (\overline{A} + \overline{B})\ =\ \prod (0, 2, 3)\,.
\end{aligned}
\]